My Clean Code Experience No. 1 (with before and after code examples)

Public Code Review

Robert C. Martin was kind enough to review the code in this post on his new blog, Clean Coder. Be sure to read his review when you finish reading this post.

Introduction

After I expressed an interest in reading Robert C. Martin’s books, one of my Twitter followers was kind enough to give me a copy of Uncle Bob’s book Clean Code as a gift*. This post is about my first refactoring experience after reading it, and the code that resulted from my first Clean Code refactor.

Sample code

The code used in this post is based on the data access layer (DAL) used in a side project I’m currently working on. Specifically, my sample project is based on a refactor of the DAL classes for comment data. The CommentData class and surrounding code were simplified for the example, in order to focus on the DAL’s refactoring rather than the comment functionality. Of course, the comment class could be anything.

Download my Clean Code refactor sample project (VS2008)

Please notice:

1. The database can be generated from the script in the SQL folder

2. This code will probably make the most sense if you step through it

3. This blog post is about 1,700 words, so if you aren’t into reading, you will still get the gist of what I’m saying just from examining the source code.

What Clean Code isn’t about

Before starting, I want to point out that Clean Code is not about formatting style. While we all have our curly brace positioning preferences, it really is irrelevant. Clean Code strikes at a much deeper level, and although your ‘style’ will be affected tremendously, you won’t find much about formatting style.

My original code

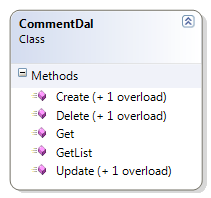

My original comment DAL class is in the folder called Dirty.Dal, and contains one file called CommentDal.cs containing the CommentDal class. This class is very typical of how I wrote code before reading this book**.

The original CommentDal class is 295 lines of code altogether and has a handful of well-named methods. Now, 295 lines of code is hardly awful; it doesn’t seem very complex, relatively speaking, and really, we’ve all seen (and coded) worse. But although the class interface does seem pretty simple, the simplicity of its class diagram hides the complexity of its code.

```csharp
public static void Create(IEnumerable<CommentData> Comments, SqlConnection cn)
{
    // validate params
    if (null == cn) throw new ArgumentNullException("cn");
    if (cn.State != ConnectionState.Open) throw new ArgumentException("Invalid parameter: connection is not open.", "cn");
    if (null == Comments) throw new ArgumentNullException("Comments");
    foreach (CommentData data in Comments)
    {
        if (data.CommentId.HasValue)
            throw new ArgumentException("Create is only for saving new data. Call save for existing data.", "Comments");
    }

    // prepare command
    using (SqlCommand cmd = cn.CreateCommand())
    {
        cmd.CommandText = "ins_comment";
        cmd.CommandType = CommandType.StoredProcedure;

        // add parameters
        SqlParameter param = cmd.Parameters.Add("@comment_id", SqlDbType.Int);
        param.Direction = ParameterDirection.Output;
        cmd.Parameters.Add("@comment", SqlDbType.NVarChar, 50);
        cmd.Parameters.Add("@commentor_id", SqlDbType.Int);

        // prepare and execute
        cmd.Prepare();

        // update each item
        foreach (CommentData data in Comments)
        {
            try
            {
                // set parameter
                cmd.Parameters["@comment"].SetFromNullOrEmptyString(data.Comment);
                cmd.Parameters["@commentor_id"].SetFromNullableValue(data.CommentorId);

                // save it
                cmd.ExecuteNonQuery();

                // update the new comment id
                data.CommentId = Convert.ToInt32(cmd.Parameters["@comment_id"].Value);
            }
            catch (Exception ex)
            {
                string msg = string.Format("Error creating Comment '{0}'", data);
                throw new Exception(msg, ex);
            }
        }
    }
}
```

This method can be simplified dramatically into a more readable style with fewer control statements.

But first, notice how the method is segmented into groupings of related lines, each isolated by a single blank line before and after it, with a comment prefixing most of them. Each of these groupings is a smell, indicating it should be its own method.
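To make the idea concrete, here is a minimal JavaScript sketch (not the sample project’s C#) of Create’s commented groupings pulled out into named functions. `throwIfCommentsInvalid`, `saveNewComments`, `createComments`, and the `insertOne` callback are hypothetical names; `insertOne` stands in for the prepared SqlCommand and is assumed to return the new comment id.

```javascript
// Hypothetical sketch: each commented "grouping" from Create becomes a named function.

// Was the "// validate params" grouping.
function throwIfCommentsInvalid(comments) {
  if (comments == null) throw new TypeError("comments is required");
  for (const data of comments) {
    if (data.commentId != null) {
      throw new Error("Create is only for saving new data. Call save for existing data.");
    }
  }
}

// Was the "// update each item" grouping. insertOne stands in for the
// prepared command and returns the new identity value.
function saveNewComments(comments, insertOne) {
  for (const data of comments) {
    data.commentId = insertOne(data);
  }
}

// The top-level function now reads as a summary of its steps.
function createComments(comments, insertOne) {
  throwIfCommentsInvalid(comments);
  saveNewComments(comments, insertOne);
}

// Usage with a fake insert that hands out sequential ids.
let nextId = 100;
const comments = [{ comment: "first", commentId: null }, { comment: "second", commentId: null }];
createComments(comments, () => nextId++);
console.log(comments.map(c => c.commentId)); // [ 100, 101 ]
```

Each function name now carries the label the comment used to provide; the database plumbing is deliberately faked so only the structure shows.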

Before reading Clean Code, this was clean to me … this was beautiful code to me.

My new ‘clean’ code

I’ve got a feeling I missed a lot in this book and will probably end up rereading it several times, but the biggest takeaways from reading it in my first pass were:

Smaller, well-named classes & methods are easier to maintain and read. You may notice that the classes in the Clean.Dal directory are smaller, with file sizes hovering around the 50 LOC mark. That’s 50 LOC for an entire class, when in the past only my smallest methods were under 50 LOC. I’ve now realized that no code grouping is too small to separate into its own property, method, or even class***. Sometimes it’s wise to refactor a single expression into a property just to label it****.
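As a small illustration of labeling a single expression, here is a hypothetical JavaScript take on the `data.CommentId.HasValue` check from the original Create method, wrapped in a getter. `CommentData` mirrors the post’s class name, but its fields and the `isAlreadySaved` getter are assumptions made for this sketch.

```javascript
// Hypothetical CommentData with a getter that names the expression it wraps.
class CommentData {
  constructor(comment, commentorId, commentId = null) {
    this.comment = comment;
    this.commentorId = commentorId;
    this.commentId = commentId; // null until the row has been inserted
  }

  // Labels "commentId has a value": callers read the intent, not the null check.
  get isAlreadySaved() {
    return this.commentId !== null && this.commentId !== undefined;
  }
}

const fresh = new CommentData("Nice post", 7);
const stored = new CommentData("Old comment", 7, 42);
console.log(fresh.isAlreadySaved, stored.isAlreadySaved); // false true
```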

Here is the equivalent of my new Create method:

```csharp
public static void Execute(IEnumerable<CommentData> comments, int userId, SqlConnection cn)
{
    ThrowExceptionIfExecuteMethodCommentsParameterIsInvalid(comments);
    using (CommentInsertCommand insCmd = new CommentInsertCommand(cn))
    {
        foreach (CommentData data in comments)
            data.CommentId = insCmd.Execute(data, userId);
    }
}
```

From the above code, you may notice not only how much smaller and simpler the ‘Create’ method has become, but also that its functionality has been moved from a method to its own smaller class. The smaller class is focused on its single task of creating a comment in the database and is therefore not only easier to maintain, but will only require maintaining when a very specific change in functionality is requested, which reduces the risk of introducing bugs.

The small class / property / method idea extends to moving multi-line code blocks following control statements into their own methods.

For example:

```csharp
while (SomethingIsTrue())
{
    blah1();
    blah2();
    blah3();
}
```

Is better written as

```csharp
while (SomethingIsTrue())
    BlahBlahBlah();
```

With the ‘while’s block moved into its own BlahBlahBlah() method. It almost makes you wonder if having braces follow a control statement is a code smell, doesn’t it? *****

Also, as part of the small, well-named methods idea, detailed function names make comments redundant & obsolete. I’ve come to recognize that most comments are a code smell. Check this out: while I was writing this, my colleague Simon Taylor reviewed my code and pointed out that although my dynamic SQL was safe, developers who come after me may not see what made it safe, and may add user-entered input to the dynamic SQL. He suggested a comment for clarification.

He was absolutely right, but instead of adding a comment, I separated it into its own method, which I believe makes things very clear. See below:

```csharp
protected override string SqlStatement
{
    get
    {
        return GenerateSqlStatementFromHardCodedValuesAndSafeDataTypes();
    }
}

protected string GenerateSqlStatementFromHardCodedValuesAndSafeDataTypes()
{
    StringBuilder sb = new StringBuilder(1024);
    sb.AppendFormat(@"select comment_id,
        comment,
        commentor_id
        from {0} ",
        TableName);
    sb.AppendFormat("where Comment_id={0} ", Filter.Value);
    return sb.ToString();
}
```

Not only is this less likely to go stale, it will also clearly identify exactly what is going on both at the method declaration and everywhere it is called.

Moving control flow into the polymorphic structure is another technique for achieving clean code. Notice that the ‘if’s in the ‘Clean.Dal’ version are pretty much reserved for parameter validation. I’ve come to recognize ‘if’s, especially ones that test a Boolean or enum method parameter, as a very distinct code smell, suggesting that a derived class may be more appropriate.
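Here is a minimal JavaScript sketch of that smell and one way to refactor it (the post’s code is C#; this only illustrates the shape). `saveComment`, the command classes, and the returned `{ proc, params }` objects are hypothetical, and `upd_comment` is an assumed procedure name; only `ins_comment` appears in the original code.

```javascript
// Before: a Boolean parameter decides which branch runs.
function saveComment(data, isNew) {
  if (isNew) {
    return { proc: "ins_comment", params: { comment: data.comment } };
  }
  return { proc: "upd_comment", params: { comment_id: data.commentId, comment: data.comment } };
}

// After: each case is its own class. The caller chooses the class once,
// and the branch disappears from the method bodies.
class CommentCommand {
  build(data) {
    return { proc: this.procName, params: this.params(data) };
  }
}

class CommentInsertCommand extends CommentCommand {
  get procName() { return "ins_comment"; }
  params(data) { return { comment: data.comment }; }
}

class CommentUpdateCommand extends CommentCommand {
  get procName() { return "upd_comment"; } // assumed procedure name
  params(data) { return { comment_id: data.commentId, comment: data.comment }; }
}

const data = { commentId: 3, comment: "edited" };
console.log(new CommentUpdateCommand().build(data).proc); // upd_comment
```

Both versions produce the same result; the difference is that adding a third kind of command means adding a class rather than another branch.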

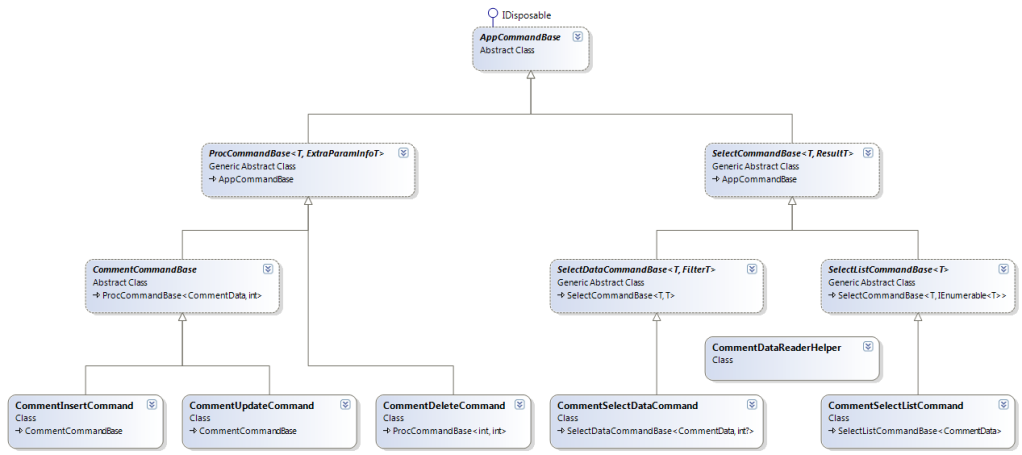

Reusable base classes are also a valuable by-product of writing clean code. A reusable class library is not the goal in itself, in an anti-YAGNI kind of way, but is instead a natural side effect of organizing your code properly.

Clean code is also very DRY. There is very little if any duplicated code.

The structure is based 100% on the existing, working code, not on some perceived structure derived from real-world is-a relationships in the domain, which don’t always match up perfectly.

One more thing: unfortunately, not everything is going to fit nicely into the object-oriented structure, and sometimes a helper class or extension method will be the best alternative. See the CommentDataReaderHelper class as an example.

A closer look

Here’s a quick overview of the class hierarchy, using CommentUpdateCommand as an example.

One of the first things you may notice about this class is that there is only one entry point: the static method Execute(). This provides a very simple and obvious way to use the class. You can place a breakpoint in this method and step through the class and its hierarchy to fully understand it.

The class hierarchy is based completely on existing, working code and was designed to share functionality effectively without duplicating code. Each class is abstracted as far as it needs to be, and no further, yet the duties of each class in the hierarchy are as crystal clear as if it had been designed to look like this from the beginning.

Here are the classes:

The AppCommandBase class manages an SqlCommand object.

The ProcCommandBase class executes a given stored procedure.

The CommentCommandBase class shares functionality specific to the Comment table.

The CommentUpdateCommand class implements functionality specific to the comment update stored procedure and hosts the static entry point method ‘Execute’.
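The four layers above might be sketched like this. It is a JavaScript approximation under stated assumptions, not the sample project’s C#: `FakeConnection` stands in for SqlConnection, the `upd_comment` procedure name and parameter handling are assumptions, and the C# static `Execute()` entry point becomes a static `execute` method.

```javascript
// Fake connection standing in for SqlConnection; records each call it receives.
class FakeConnection {
  constructor() { this.calls = []; }
  run(commandType, text, params) {
    this.calls.push({ commandType, text, params });
    return 1; // pretend one row was affected
  }
}

// AppCommandBase: owns the connection and the command parameters.
class AppCommandBase {
  constructor(cn) {
    if (!cn) throw new TypeError("cn is required");
    this.cn = cn;
    this.params = {};
  }
}

// ProcCommandBase: runs a stored procedure whose name the subclass supplies.
class ProcCommandBase extends AppCommandBase {
  runProc() { return this.cn.run("StoredProcedure", this.procName, this.params); }
}

// CommentCommandBase: parameters shared by every Comment-table command.
class CommentCommandBase extends ProcCommandBase {
  setCommentParams(data) {
    this.params["@comment"] = data.comment;
    this.params["@commentor_id"] = data.commentorId;
  }
}

// CommentUpdateCommand: update-specific details plus the single static entry point.
class CommentUpdateCommand extends CommentCommandBase {
  get procName() { return "upd_comment"; } // assumed procedure name
  static execute(data, cn) {
    const cmd = new CommentUpdateCommand(cn);
    cmd.setCommentParams(data);
    cmd.params["@comment_id"] = data.commentId;
    return cmd.runProc();
  }
}

const cn = new FakeConnection();
CommentUpdateCommand.execute({ commentId: 5, comment: "edited", commentorId: 7 }, cn);
console.log(cn.calls[0].text); // upd_comment
```

As in the post, a caller only ever touches `execute`; stepping into it walks down through each layer in turn.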

The cost of writing Clean Code

When I started writing this, I didn’t know there were any downsides to writing clean code, but once I began this post, and started gathering evidence to prove how awesome the clean code strategy was, some evidence ruled against my initial euphoria. Here is a list of observations which could be looked upon unfavorably.

Increased LOC: This was really pronounced in a later experience (Clean Code Experience No. 2, coming soon), but I actually thought LOC had decreased in this sample, at least once you take the reusable base classes into account … but it hadn’t. While I haven’t done an in-depth analysis, the code specific to comments, before counting any abstract base classes, appears to have a LOC count similar to the Dirty.Dal class. Much of this comes from structural code, like method declarations, but it was still disappointing.

Increased structural complexity: I suppose this should have been obvious, but it didn’t occur to me until I was writing this blog post that the complexity didn’t really disappear; it just moved from the code into the polymorphic structure. Having said that, I suspect maintaining Clean Code with a vigorous polymorphic structure would cost less than maintaining a traditional code base.

Method call overhead: With all the classes, properties, and methods, it occurred to me that method call overhead may carry a performance trade-off. I asked Uncle Bob about this on Twitter, and his reply was “Method call overhead is significant in deep inner loops. Elsewhere it is meaningless. Always measure before you optimize.” Pretty good advice, I think.

But even after realizing the trade offs, Clean Code is still, clearly, the way to go.

In Summary

The Clean Code book has changed my outlook on how I write, and I think everyone should be exposed to this material. I’ve already re-gifted my Clean Code book, and the drive to write this blog post comes from a burning desire to share this information with my new colleagues.

I would love to hear your input on this blog post, my sample code, and the whole Clean Code movement. Please comment below.

Reminder

Don’t forget to read Uncle Bob’s review on his new blog, Clean Coder.

* Why did somebody I’ve never met send me a book as a gift? Because he wanted me in the C.L.U.B. (Code Like Uncle Bob). And to answer your next question, yes, I have since re-gifted the book.

** Very typical of how I wrote code before reading Clean Code … with the exception of some pretty nasty exception handling code. I removed it not because of its ugliness, but because that ugliness might have distracted from the code logic refactoring I’m trying to emphasize.

*** And when I say no code is too small, stay tuned for Clean Code Experience No. 2, which was so unconventional it left me questioning whether I’d taken this concept way too far.

**** Although, to be fair, my first exposure to this idea was from Scott Hanselman, after reading his first MVC book (this link is to his second MVC book).

***** Wrap your head around that, those of you who feel a single line following a control statement should have braces. LOL

Copyright © John MacIntyre 2010, All rights reserved

PS: Big thanks to Ben Alabaster for pushing me to write this post.

How To Get The Most Frequently Used Column Values

Whenever I import external data, integrate with another database, or am new to a project, I need to get familiar with the database. The table schemas, relational integrity, and constraints are the first things I look at, and they take me a long way, but soon I need to know what the data looks like.

In an ideal world, relational integrity and database constraints would control this, and all I’d really need to do is look at those. But the reality is that in 15 years of working in this industry, most of the databases I’ve worked on that I didn’t design have barely used constraints, and some haven’t even fully used relational integrity!

The need to get a good feel for the data is even greater when working with dirty data, or when refactoring poorly written applications, to ensure the refactoring doesn’t introduce other issues. I usually wind up writing the following query repeatedly:

```sql
Select column_name, count(*)
From table_name
Group by column_name
Order by count(*) desc, column_name
```

This little query often reveals inconsistencies between the data and the application. For example, an application may set column X to ‘A’, ‘B’, ‘C’, ‘D’, or ‘E’, but in reality there may be zero ‘C’ and ‘E’ values in that column, while there are 6 ‘X’s, 1 ‘Q’, and a ‘?’. Or I may find that only 6 rows out of almost 3 million have data in that column, indicating the column / application feature is unused.
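If a column’s values are already in memory (for example, rows pulled from an import file), the same checks can be done in application code. A minimal JavaScript sketch, assuming the values arrive as a plain array; `countValues`, `unexpectedValues`, and `missingValues` are hypothetical helpers, and the sample data mirrors the ‘A’ to ‘E’ example above.

```javascript
// Count occurrences of each value, most frequent first, ties broken by value.
// Roughly the in-memory equivalent of GROUP BY ... ORDER BY count(*) DESC, column_name
// (localeCompare will not necessarily match a SQL Server collation).
function countValues(values) {
  const counts = new Map();
  for (const v of values) counts.set(v, (counts.get(v) || 0) + 1);
  return [...counts.entries()]
    .map(([value, count]) => ({ value, count }))
    .sort((a, b) => b.count - a.count || String(a.value).localeCompare(String(b.value)));
}

// Values the data actually holds that the application never sets.
function unexpectedValues(values, expected) {
  const allowed = new Set(expected);
  return countValues(values).filter(row => !allowed.has(row.value));
}

// Values the application can set that never appear in the data.
function missingValues(values, expected) {
  const present = new Set(values);
  return expected.filter(v => !present.has(v));
}

const column = ["A", "A", "B", "D", "X", "X", "Q", "?", "X"];
console.log(unexpectedValues(column, ["A", "B", "C", "D", "E"]));
console.log(missingValues(column, ["A", "B", "C", "D", "E"])); // [ 'C', 'E' ]
```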

Anyway, yesterday I finally wrote a little stored procedure which will print out the most frequent N values in each column for a specified table.

```sql
/*
Purpose : Retrieves the top N most frequent values in each column of the specified data table.
Copyright © Jaten Systems Inc. 2009, All rights reserved
http://www.jatensystems.com/
https://whileicompile.wordpress.com/
http://www.johnmacintyre.ca/
*/
create proc jaten_diag_GetTableContents
(
    @tblName nvarchar(200),
    @rowCount int
)
as
begin
    declare @colId int;
    declare @colName nvarchar(100);
    declare @sql nvarchar(2048);
    declare @tblRowCount int;

    -- cursor to get columns
    DECLARE curColumns CURSOR FAST_FORWARD FOR
        SELECT colid, [name]
        FROM syscolumns
        where id=object_id(@tblName)
        order by colid
    open curColumns;

    -- get table row count
    set @sql = 'select @retCount=count(*) from ' + @tblName;
    exec sp_executeSQL @sql, N'@retCount int OUTPUT', @retCount = @tblRowCount OUTPUT;

    -- print table header
    print '';
    print '---------------------------------';
    print '--- ' + @tblName;
    print '--- Row count : ' + cast(@tblRowCount as nvarchar)
    print '---------------------------------';

    -- get info for each column
    fetch next from curColumns into @colId, @colName;
    while 0 = @@fetch_status
    begin
        -- print column header
        print '';
        print '---------------------------------';
        print '--- Column [' + cast(@colId as nvarchar) + '] - ' + @colName + ' ---';

        -- compile & execute grouping sql
        select @sql = 'select top ' + cast(@rowCount as nvarchar) + ' count(*) as [count], '
            + ' cast(((count(*) * 100)/' + cast(@tblRowCount as nvarchar) + ') as nvarchar) + ''%'' as [Percentage], '
            + ' [' + @colName + '] as [col_value] '
            + 'from ' + @tblName + ' '
            + 'group by [' + @colName + '] '
            + 'order by count(*) desc, [' + @colName + ']';
        exec sp_executeSQL @sql;
        --print @sql;

        -- next
        fetch next from curColumns into @colId, @colName;
    end

    -- clean up
    close curColumns;
    deallocate curColumns;
end
```

Please note 2 things :

1. You need to run it with the ‘Results to Text’ or ‘Results to File’ setting.

2. The table parameter will need square brackets if the table name uses unconventional characters.
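For data you haven’t loaded into SQL Server yet, a similar per-column report can be produced in application code. A minimal JavaScript sketch, assuming rows are plain objects that all share the first row’s keys; `topValuesPerColumn` is a hypothetical name. Like the procedure, it truncates percentages to whole numbers (248 of 504 rows reports 49%, not 49.2%); unlike the procedure, ties keep first-seen order rather than being sorted by value.

```javascript
// For each column, return the top N values by frequency with an integer percentage,
// loosely mirroring the output of jaten_diag_GetTableContents.
function topValuesPerColumn(rows, topN) {
  const total = rows.length;
  const report = {};
  if (total === 0) return report;
  for (const col of Object.keys(rows[0])) {
    const counts = new Map();
    for (const row of rows) {
      const v = row[col] === undefined ? null : row[col]; // treat missing as NULL
      counts.set(v, (counts.get(v) || 0) + 1);
    }
    report[col] = [...counts.entries()]
      .sort((a, b) => b[1] - a[1]) // most frequent first; stable sort keeps first-seen order for ties
      .slice(0, topN)
      .map(([value, count]) => ({
        count,
        percentage: Math.trunc((count * 100) / total) + "%", // integer division, like the T-SQL
        value,
      }));
  }
  return report;
}

const products = [
  { name: "Bike", color: "Black" },
  { name: "Helmet", color: null },
  { name: "Lock", color: null },
  { name: "Chain", color: "Silver" },
];
const report = topValuesPerColumn(products, 2);
console.log(report.color[0]); // { count: 2, percentage: '50%', value: null }
```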

If you create it and run it in AdventureWorks on the ‘Production.Product’ table

```sql
exec jaten_diag_GetTableContents 'Production.Product', 5
```

… you will get these results

```
---------------------------------
--- Production.Product
--- Row count : 504
---------------------------------

---------------------------------
--- Column [1] - ProductID ---
count       Percentage                      col_value
----------- ------------------------------- -----------
1           0%                              1
1           0%                              2
1           0%                              3
1           0%                              4
1           0%                              316

(5 row(s) affected)

....

---------------------------------
--- Column [6] - Color ---
count       Percentage                      col_value
----------- ------------------------------- ---------------
248         49%                             NULL
93          18%                             Black
43          8%                              Silver
38          7%                              Red
36          7%                              Yellow

(5 row(s) affected)

....
```

Notice how the Color column reveals that almost half of the products have no color set? This could mean color simply isn’t relevant to many products, or that this data has a problem being maintained. Also notice that unique columns, like ProductID, naturally produce meaningless results.

The AdventureWorks database is a very clean database, so this example is a bit contrived, but in the real world, there are plenty of databases where this little procedure will allow you to get some insight into the data.

How do you get familiar with new data?

Copyright © John MacIntyre 2009, All rights reserved

WARNING – All source code is written to demonstrate the current concept. It may be unsafe and not exactly optimal.

How To Guarantee Dependent JavaScript Files are Included

I’m very much a statically typed kind of programmer, and knowing that missing code won’t be found until it’s executed, and then only when execution actually hits that missing code … well, let’s just say it makes me … uneasy … actually, I’m nervous as heck every time I run it! I feel like my app is held together with duct tape! …. a house of cards waiting to collapse with the next gentle breeze.

When I started programming in JavaScript, this really bothered me. In addition to my ‘uneasy’ feeling, there was the constant aggravation of missing dependencies. This was more than uneasiness; this was an irritating thorn in my side. It was compounded by the fact that if there were 5 missing dependencies, I would only find them one at a time, and only if I was lucky enough to cover each reference in my GUI testing!

Wouldn’t it be nice if my code told me, on the very first run, every dependent file that was missing?

I thought so, so I came up with this little trick to test whether a JavaScript file has already been included. Basically, you create a variable at the top of each JavaScript file that others depend on. I try to keep the name obvious, and weird enough to avoid collisions.

```javascript
var include_ajax_utility = true;
```

Then in all the files which are dependent on this script (the ajax utility in this case), I add the following code to check.

```javascript
//----------------------------------
// Check for dependencies
//----------------------------------
try
{
    var fileName = "mypage.events.js";
    if( "undefined" == typeof(include_ajax_utility))
        alert( "JavaScript file '" + fileName
            + "' is missing dependent file"
            + " : ajax.utility.js");
}
catch(e)
{
    alert( "Programmer Alert : At least one dependent"
        + " file has not been included.");
}
```

Notice the code checks whether the variable is defined (in other words, whether it exists), and if it isn’t, an alert tells the user exactly which dependent file is missing. [As a side note, the try catch block doesn’t appear to be necessary, but still … I like to cover all my bases]
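Since the real annoyance was discovering missing files one at a time, the same check can be generalized to report every missing dependency in a single message. A minimal sketch, assuming each dependency declares its flag at the top level of a classic script, so it becomes a property of the global object (as `var include_ajax_utility = true;` does in a browser); `findMissingDependencies`, `checkDependencies`, and the `ui.widgets.js` entry are hypothetical.

```javascript
// Map each required flag variable to the file that is supposed to define it.
// Returns the names of the files whose flag is not defined on the global object.
function findMissingDependencies(required, globalObj = globalThis) {
  return Object.keys(required)
    .filter(flag => typeof globalObj[flag] === "undefined")
    .map(flag => required[flag]);
}

// In a page script: one message listing every missing file, instead of one per run.
function checkDependencies(fileName, required) {
  const missing = findMissingDependencies(required);
  if (missing.length > 0) {
    const msg = "JavaScript file '" + fileName + "' is missing dependent file(s): " + missing.join(", ");
    if (typeof alert === "function") alert(msg); else console.error(msg);
  }
  return missing;
}

checkDependencies("mypage.events.js", {
  include_ajax_utility: "ajax.utility.js",
  include_ui_widgets: "ui.widgets.js", // hypothetical second dependency
});
```

This collapses several messages into one, but script tag order still matters, because the check runs at the moment the dependent file loads.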

There are a couple of drawbacks to doing this, however: a) you may get multiple messages saying practically the same thing, and b) the JavaScript script tags need to be in order! Yes, tag ordering can be frustrating when you insert a new dependency into existing code only to have this break, and that is enough to make most people quickly abandon the idea. But for me, it’s a small price to pay for a giant step forward in having a sense of stability.

What do you think? A little too anal? 😉

Copyright © John MacIntyre 2009, All rights reserved


How To Write Dynamic SQL AND Prevent SQL Injection Attacks

One of my pet peeves is when general rules are taken as gospel, and declared as the only acceptable practice regardless of the circumstance.

One of the big ones is Dynamic SQL. There’s a heck of a good reason for this, and it’s called an SQL Injection Attack, and if you are not familiar with it, I would strongly urge you to leave this post right now, and read up on it.

Anyway, Dynamic SQL is not inherently evil; it’s appending user-entered text that is evil. Appending user-entered text is just lazy and can be easily avoided with parameterization.

The trick is to create dynamic SQL with parameters.

Interestingly, I’ve never seen anybody else do this. I constantly hear people recommending stored procedures … even when they are clearly not flexible enough to meet the required functionality. Don’t get me wrong, stored procedures have a lot of benefits, but flexibility isn’t one they’re known for.

And now for some code …

I created a console app which queries the SQL Server sample database AdventureWorks. The following static method was added to the Program class.

```csharp
public static int GetOrderCount(string productPrefix, SqlConnection cn)
{
    // initialize SQL
    string starterSql = "SELECT count(*) FROM Production.Product";
    StringBuilder sbSql = new StringBuilder(starterSql);

    // add parameters
    if (!String.IsNullOrEmpty(productPrefix))
        sbSql.Append(" where [name] like @namePrefix");

    // initialize the command (disposed when we're done with it)
    using (SqlCommand cmd = new SqlCommand(sbSql.ToString(), cn))
    {
        if (cmd.CommandText.Contains("@namePrefix"))
            cmd.Parameters.AddWithValue("@namePrefix", productPrefix + "%");

        // get count
        return Convert.ToInt32(cmd.ExecuteScalar());
    }
}
```

Basically, the function counts the products whose name starts with a certain prefix (despite the method’s name, it counts products, not orders).

The strength of doing this via dynamic SQL is we only need to filter on the product name when a valid prefix parameter is passed in. So, if the optional parameter (productPrefix) exists and is valid, the filter condition is added to the SQL and the parameter is added to the SqlCommand object.

In this overly simplified example, we could manage the same thing by just setting the productPrefix variable to the ‘%’ wild card, but then we’d be doing a filter for nothing. Not to mention things might be a little more difficult if the operator were ‘equals’ instead of ‘like’, or if there were multiple optional parameters. Creating SQL dynamically means we don’t need to write some funky kludge and our SQL is always nice, simple, and doing minimal work.
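Here is a sketch of the multiple-optional-parameter case, in JavaScript for brevity: the SQL text is assembled only from hard-coded fragments, and every caller-supplied value goes into a separate parameter map. `buildProductCountQuery`, the `filters` object, and the `@minPrice`/`ListPrice` filter are illustrative assumptions; executing the result is left to whichever database driver you use.

```javascript
// Build a parameterized query from optional filters. Only fixed SQL fragments are
// concatenated; user input goes into the params object, never into the SQL text.
function buildProductCountQuery(filters = {}) {
  const where = [];
  const params = {};

  if (filters.namePrefix) {
    where.push("[name] like @namePrefix");
    params["@namePrefix"] = filters.namePrefix + "%";
  }
  if (filters.minPrice !== undefined && filters.minPrice !== null) {
    where.push("ListPrice >= @minPrice"); // hypothetical second optional filter
    params["@minPrice"] = filters.minPrice;
  }

  let sql = "SELECT count(*) FROM Production.Product";
  if (where.length > 0) sql += " where " + where.join(" and ");
  return { sql, params };
}

const q = buildProductCountQuery({ namePrefix: "'; drop table Production.Product;--" });
console.log(q.sql);    // SELECT count(*) FROM Production.Product where [name] like @namePrefix
console.log(q.params); // the hostile text only ever appears here, as a value
```

As in the C# version, a condition and its parameter are added together, so the SQL never references a parameter that wasn’t supplied.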

To execute my function, I added the following code to the Main(…) method.

```csharp
// get total count
Console.WriteLine("There are {0} products in total.",
    Program.GetOrderCount(null, cn));

// get totals for various prefixes
string[] prefixes = new string[6] { "a", "b", "c",
    "'; drop table Production.Product;--",
    "d", "e" };
foreach (string prefix in prefixes)
{
    Console.WriteLine("There are {0} products"
        + " prefixed with '{1}'.",
        Program.GetOrderCount(prefix, cn), prefix);
}
```

First we call GetOrderCount(…) without a name prefix to test it without the parameter, then we traverse the array of possible prefixes (this would be the user entered data in a real app). Notice the fourth item? Pretty menacing eh? Don’t worry, it’s safe.

Here are the results

```
There are 504 products in total.
There are 3 products prefixed with 'a'.
There are 4 products prefixed with 'b'.
There are 12 products prefixed with 'c'.
There are 0 products prefixed with ''; drop table Production.Product;--'.
There are 3 products prefixed with 'd'.
There are 9 products prefixed with 'e'.
```

Notice the ‘d’ and ‘e’ prefixes were searched, and items found, proving the ‘drop table’ statement was not injected into the command.

You’d be surprised how much I use this. Many of my objects have a static GetList(…) method, and this method usually has multiple overloads. Keeping with the DRY principle, I prefer to keep all my logic in one place, so this method will usually have one overload with every possible filter parameter, and all the other overloads just call that one. Surprisingly, the overload containing the code is not overly complex; it’s actually pretty simple.
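JavaScript has no method overloading, but the same single-source-of-truth arrangement can be approximated with thin wrappers around one function that accepts every filter. A hypothetical sketch: `getProductList`, the wrapper names, and the in-memory `sample` array stand in for a real GetList(…) that would build parameterized SQL the way GetOrderCount does.

```javascript
// The one "full" version: every filter is optional, and only supplied filters apply.
// Prefix matching is case-insensitive, similar to SQL Server's default collation.
function getProductList(products, { namePrefix, color } = {}) {
  return products.filter(p =>
    (namePrefix === undefined || p.name.toLowerCase().startsWith(namePrefix.toLowerCase())) &&
    (color === undefined || p.color === color));
}

// The "overloads": thin wrappers that only decide which filters to pass along.
const getAllProducts = products => getProductList(products);
const getProductsByPrefix = (products, prefix) => getProductList(products, { namePrefix: prefix });
const getProductsByColor = (products, color) => getProductList(products, { color });

const sample = [
  { name: "Chainring", color: "Black" },
  { name: "Crankarm", color: "Silver" },
  { name: "Blade", color: null },
];
console.log(getProductsByPrefix(sample, "c").length); // 2
```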

What do you think? Will you use parameterized dynamic SQL in the future?

Copyright © John MacIntyre 2009, All rights reserved


My name is John MacIntyre.
