My Clean Code Experience No. 1 (with before and after code examples)

Public Code Review

Robert C. Martin was kind enough to review the code in this post on his new blog, Clean Coder. Be sure to read his review when you finish reading this post.

Introduction

After I expressed an interest in reading Robert C. Martin’s books, one of my Twitter followers was kind enough to give me a copy of Uncle Bob’s book Clean Code as a gift*. This post is about my first refactoring experience after reading it, and the code that resulted from that first Clean Code refactor.

Sample code

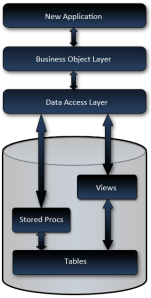

The code used in this post is based on the data access layer (DAL) used in a side project I’m currently working on. Specifically, my sample project is based on a refactor of the DAL classes for comment data. The CommentData class and surrounding code were simplified for the example, in order to focus on the DAL’s refactoring rather than the comment functionality. Of course, the comment class could be anything.

Download my Clean Code refactor sample project (VS2008)

Please note:

1. The database can be generated from the script in the SQL folder

2. This code will probably make the most sense if you step through it

3. This blog post is about 1,700 words, so if you aren’t into reading, you will still get the gist of what I’m saying just from examining the source code.

What Clean Code isn’t about

Before starting, I want to point out that Clean Code is not about formatting style. While we all have our curly brace positioning preferences, it really is irrelevant. Clean Code strikes at a much deeper level, and although your ‘style’ will be affected tremendously, you won’t find much about formatting style.

My original code

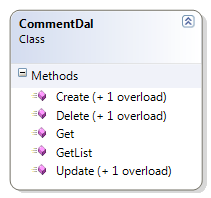

My original comment DAL class is in the folder called Dirty.Dal, and contains one file called CommentDal.cs containing the CommentDal class. This class is very typical of how I wrote code before reading this book**.

The original CommentDal class is 295 lines of code altogether and has a handful of well-named methods. Now, 295 lines of code is hardly awful; it doesn’t seem very complex, relatively speaking, and really, we’ve all seen (and coded) worse. But although the class interface seems pretty simple, the simplicity of its class diagram hides the complexity of its code.

```csharp
public static void Create(IEnumerable<CommentData> Comments, SqlConnection cn)
{
    // validate params
    if (null == cn) throw new ArgumentNullException("cn");
    if (cn.State != ConnectionState.Open) throw new ArgumentException("Invalid parameter: connection is not open.", "cn");
    if (null == Comments) throw new ArgumentNullException("Comments");
    foreach (CommentData data in Comments)
    {
        if (data.CommentId.HasValue)
            throw new ArgumentException("Create is only for saving new data. Call save for existing data.", "data");
    }

    // prepare command
    using (SqlCommand cmd = cn.CreateCommand())
    {
        cmd.CommandText = "ins_comment";
        cmd.CommandType = CommandType.StoredProcedure;

        // add parameters
        SqlParameter param = cmd.Parameters.Add("@comment_id", SqlDbType.Int);
        param.Direction = ParameterDirection.Output;
        cmd.Parameters.Add("@comment", SqlDbType.NVarChar, 50);
        cmd.Parameters.Add("@commentor_id", SqlDbType.Int);

        // prepare and execute
        cmd.Prepare();

        // update each item
        foreach (CommentData data in Comments)
        {
            try
            {
                // set parameter
                cmd.Parameters["@comment"].SetFromNullOrEmptyString(data.Comment);
                cmd.Parameters["@commentor_id"].SetFromNullableValue(data.CommentorId);

                // save it
                cmd.ExecuteNonQuery();

                // update the new comment id
                data.CommentId = Convert.ToInt32(cmd.Parameters["@comment_id"].Value);
            }
            catch (Exception ex)
            {
                string msg = string.Format("Error creating Comment '{0}'", data);
                throw new Exception(msg, ex);
            }
        }
    }
}
```

This method can be simplified dramatically into a more readable style with fewer control statements.

But first, notice how the method is segmented into groupings of similar lines, with each grouping set off by a single line of white space before and after it, and most groupings prefixed with a comment. Each of these groupings is a smell, indicating it should be its own method.

Before reading Clean Code, this was clean to me … this was beautiful code to me.

My new ‘clean’ code

I’ve got a feeling I missed a lot in this book and will probably end up rereading it several times, but the biggest takeaways from reading it in my first pass were:

Smaller, well-named classes and methods are easier to maintain and read. You may notice in the Clean.Dal directory that the classes are smaller, with file sizes hovering around the 50 LOC mark. That is 50 LOC for an entire class, when in the past only my smallest methods would be less than 50 LOC. I’ve now realized that no code grouping is too small to separate into its own property, method, or even class***. Sometimes it’s wise to refactor a single expression into a property just to label it****.
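To illustrate the last point, here is a minimal sketch of labelling a single expression with a property. The Invoice class and its members are hypothetical names made up for this illustration; they are not part of the sample project.

```csharp
using System;

// Hypothetical type for illustration only; not part of the sample project.
public class Invoice
{
    public DateTime DueDate { get; set; }
    public decimal BalanceOwing { get; set; }

    // Before: every caller repeats the raw expression and has to decode it.
    //   if (invoice.BalanceOwing > 0m && invoice.DueDate < DateTime.Today) ...
    //
    // After: the expression has a name, so the call site reads
    //   if (invoice.IsOverdue) ...
    public bool IsOverdue
    {
        get { return BalanceOwing > 0m && DueDate < DateTime.Today; }
    }
}
```

The property adds no behaviour at all; its only job is to give the expression a name at every place it is used.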

Here is the equivalent of my new Create method:

```csharp
public static void Execute(IEnumerable<CommentData> comments, int userId, SqlConnection cn)
{
    ThrowExceptionIfExecuteMethodCommentsParameterIsInvalid(comments);
    using (CommentInsertCommand insCmd = new CommentInsertCommand(cn))
    {
        foreach (CommentData data in comments)
            data.CommentId = insCmd.Execute(data, userId);
    }
}
```

From the above code, you may notice not only how much smaller and simpler the ‘Create’ method has become, but also that its functionality has been moved from a method to its own smaller class. The smaller class is focused on its single task of creating a comment in the database and is therefore not only easier to maintain, but will only require maintaining when a very specific change in functionality is requested, which reduces the risk of introducing bugs.

The small class / property / method idea extends to moving multi-line code blocks following control statements into their own methods.

For example:

```csharp
while(SomethingIsTrue())
{
    blah1();
    blah2();
    blah3();
}
```

Is better written as

```csharp
while (SomethingIsTrue())
    BlahBlahBlah();
```

With the ‘while’s block moved into its own BlahBlahBlah() method. It almost makes you wonder if having braces follow a control statement is a code smell, doesn’t it? *****

Also, as part of the small, well-named methods idea, detailed function names make comments redundant and obsolete. I’ve come to recognize most comments are a code smell. Check this out: my colleague Simon Taylor reviewed my code while I was writing this and pointed out that although my dynamic SQL was safe, colleagues following me may not see the distinction of what was safe, and may add user-entered input into the dynamic SQL. He suggested a comment for clarification.

He was absolutely right, but instead of adding a comment, I separated it into its own method, which I believe makes things very clear. See below:

```csharp
protected override string SqlStatement
{
    get
    {
        return GenerateSqlStatementFromHardCodedValuesAndSafeDataTypes();
    }
}

protected string GenerateSqlStatementFromHardCodedValuesAndSafeDataTypes()
{
    StringBuilder sb = new StringBuilder(1024);
    sb.AppendFormat(@"select comment_id,
                             comment,
                             commentor_id
                      from {0} ",
                    TableName);
    sb.AppendFormat("where Comment_id={0} ", Filter.Value);
    return sb.ToString();
}
```

Not only is this less likely to go stale, it will also clearly identify exactly what is going on both at the method declaration and everywhere it is called.

Moving control flow to the polymorphic structure is another technique to achieve clean code. Notice the ‘if’s in the ‘Clean.Dal’ version are pretty much reserved for parameter validation. I’ve come to recognize ‘if’s, especially those that test a passed-in Boolean or Enum typed method parameter, as a very distinct code smell which suggests a derived class may be more appropriate.
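As a rough sketch of what that looks like, here is a Boolean-flag method next to its polymorphic replacement. Every name below is hypothetical and invented for this illustration; none of these types come from the Clean.Dal sample.

```csharp
using System;

// Hypothetical names throughout; none of these types are in the sample project.

// Before: a Boolean parameter decides the behaviour inside one method.
public static class FlagBasedExporter
{
    public static string Export(string comment, bool asHtml)
    {
        if (asHtml)
            return "<p>" + comment + "</p>";
        return comment;
    }
}

// After: each behaviour lives in its own derived class. The 'if' is gone,
// and the caller picks the behaviour by picking the type.
public abstract class CommentExporter
{
    public abstract string Export(string comment);
}

public class PlainTextCommentExporter : CommentExporter
{
    public override string Export(string comment) { return comment; }
}

public class HtmlCommentExporter : CommentExporter
{
    public override string Export(string comment) { return "<p>" + comment + "</p>"; }
}
```

Adding a third format to the second version means adding a class, while the first version would need another parameter value and another branch.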

Reusable base classes are also a valuable by-product of writing clean code. A reusable class library is not the goal in itself, in an anti-YAGNI kind of way, but is instead a natural side effect of organizing your code properly.

Clean code is also very DRY. There is very little if any duplicated code.

The structure is 100% based on the working, existing, code, and not on some perceived structure based on real world domain is-a relationships which don’t always match up perfectly.

One more thing: unfortunately, not everything is going to fit nicely into the object oriented structure, and sometimes a helper class or extension method will be the best alternative. See the CommentDataReaderHelper class as an example.

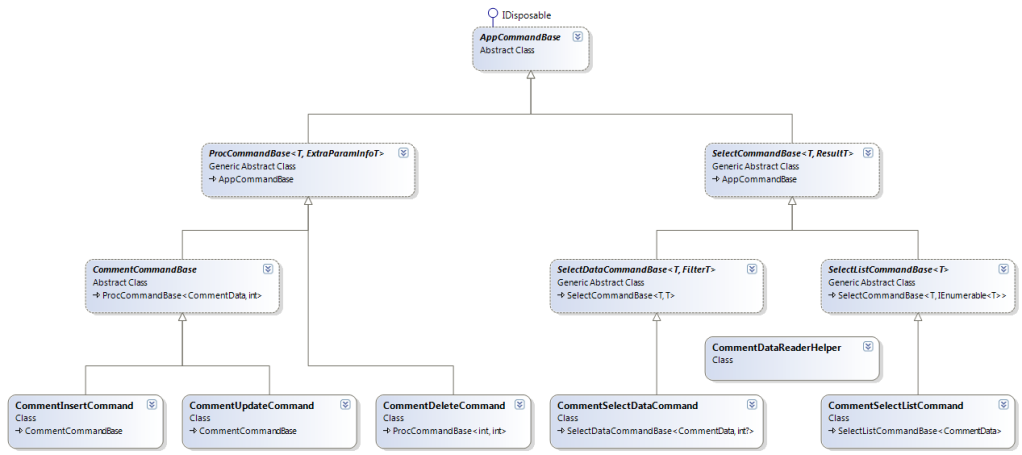

A closer look

Here’s a quick overview of the class hierarchy, using CommentUpdateCommand as an example.

One of the first things you may notice about this class is that there is only one entry point: the static method Execute(). This provides a very simple and obvious way to use the class. You can place a breakpoint in this method and step through the class and its hierarchy to fully understand it.

The class hierarchy is based completely on existing working code and was designed to share functionality effectively without duplicating code. Each class is abstracted to the point it needs to be, and no more, yet the duties of each class in the hierarchy are crystal clear, as if it had been designed from the beginning to look like this.

Here are the classes:

The AppCommandBase class manages an SqlCommand object.

The ProcCommandBase class executes a given stored procedure.

The CommentCommandBase class shares functionality specific to the Comment table.

The CommentUpdateCommand class implements functionality specific to the comment update stored procedure and hosts the static entry point method ‘Execute’.

The cost of writing Clean Code

When I started writing this, I didn’t know there were any downsides to writing clean code, but once I began this post, and started gathering evidence to prove how awesome the clean code strategy was, some evidence ruled against my initial euphoria. Here is a list of observations which could be looked upon unfavorably.

Increased LOC – This was really pronounced in a later experience (Clean Code Experience No. 2 coming soon), but I actually thought the LOC had decreased in this sample, at least once you take into account the reusable base classes … but it hadn’t. While I haven’t done an in-depth analysis of the LOC, it appears that the code specific to the comments, before taking into account any abstract base classes, has a LOC count similar to that of the Dirty.Dal class. Now much of this is from structure code, like method declarations, but it was still disappointing.

Increased structural complexity – I suppose this should have been obvious, but it didn’t occur to me until writing this blog post, the complexity didn’t really disappear; it’s just been moved from the code to the polymorphic structure. However, having said that, I suspect, maintaining Clean Code with a vigorous polymorphic structure would be lower cost than a traditional code base.

Method call overhead – With all the classes, properties, and methods, it has occurred to me that method call overhead may carry a performance trade-off. I asked Uncle Bob about this on Twitter, and his reply was “Method call overhead is significant in deep inner loops. Elsewhere it is meaningless. Always measure before you optimize.” Pretty good advice, I think.

But even after realizing the trade offs, Clean Code is still, clearly, the way to go.

In Summary

The Clean Code book has changed my outlook on how I write, and I think everyone should be exposed to this material. I’ve already re-gifted my Clean Code book, and the drive to write this blog post comes from a burning desire to share this information with my new colleagues.

I would love to hear your input on this blog post, my sample code, and the whole Clean Code movement. Please comment below.

Reminder

Don’t forget to read Uncle Bob’s review on his new blog, Clean Coder.

* Why did somebody I’ve never met send me a book as a gift? Because he wanted me in the C.L.U.B. (Code Like Uncle Bob). And to answer your next question, yes I have since re-gifted the book.

** Very typical of how I wrote code before reading Clean Code … with the exception of some pretty nasty exception handling code. I removed it not due to its ugliness, but because its ugliness may have taken away from the code logic refactoring that I am trying to emphasize.

*** And when I say no code is too small, stay tuned for Clean Code Experience No 2, which was so unconventional, it left me questioning if I’d taken this concept way too far.

**** Although to be fair, my first exposure to this idea was from Scott Hanselman after reading his first MVC book (this link is to his second MVC book).

***** Wrap your head around that, those of you who feel a single line following a control statement should have braces. LOL

Copyright © John MacIntyre 2010, All rights reserved

PS-Big thanks to Ben Alabaster for pushing me to write this post.

What’s wrong with the noun/adjective/verb object oriented design strategy

The programming course I took way back in 1993 was basically a 1 year intro to C. And like any good student, as I learned the language, I also started learning about code reuse and experienced a delightful satisfaction every time I realized 2 different functions had similar code which could be moved into a new function.

Eventually, I noticed a pattern, in that I would write a bit of functionality until it worked, then I would refactor it into a more elegant solution with as little repeated code as possible.

My code evolved quite nicely.

Then I learned C++, object oriented programming, and was introduced to the holy grail of object oriented design advice, which went something like this:

Take your requirements and circle all the nouns, those are your classes. Then underline all the adjectives, those are your properties. Then highlight all your verbs, those are your methods

This Noun / Adjective / Verb design strategy seemed like the most ingenious piece of programming wisdom ever spoken … but it’s led us down a misguided path.

It’s the verb that’s misunderstood. The verb should be another class, not a method. It should be a process class. As a programming concept, a process is just as much a ‘thing’ as any real world object. The verb should be a class, which accepts the noun as an input to be processed.
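A minimal sketch of what I mean by a process class, using a made-up order example. The Order and OrderTotalCalculation names are hypothetical and exist only for this illustration.

```csharp
using System;

// Hypothetical example; the names are illustrative only.

// The noun: plain data with no behaviour of its own.
public class Order
{
    public decimal Subtotal { get; set; }
    public decimal TaxRate { get; set; }
    public decimal Total { get; set; }
}

// The verb as a class: the 'calculate total' process accepts the noun as its input.
public class OrderTotalCalculation
{
    public void Execute(Order order)
    {
        if (order == null) throw new ArgumentNullException("order");
        order.Total = order.Subtotal + (order.Subtotal * order.TaxRate);
    }
}
```

Under the noun/adjective/verb strategy, the calculation would have been a CalculateTotal() method on Order. Here the process is a ‘thing’ in its own right, and Order is simply what it works on.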

But there’s also another problem. Up until this little shortcut was articulated, code was structured based on the implemented code, with similar functionality refactored into its own reusable units, but once the noun/adjective/verb idea became widespread, code was suddenly structured according to domain.

For example, the domain focus was really evident in the way we structured our ‘is-a’ relationships, with inheritance being based more on the real world domain, than the implementation code.

Inheritance should be based on most efficient code reuse, not the domain, because as anybody who has heard the square is not a rectangle* example can attest, sometimes the domain ‘is-a’ relationship just doesn’t work.
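For anybody who hasn’t seen it, the problem can be sketched roughly like this. This is my own minimal version of the well-known example, not the code from the article mentioned in the footnote, and RectangleClient is a hypothetical name.

```csharp
using System;

public class Rectangle
{
    public virtual int Width { get; set; }
    public virtual int Height { get; set; }
    public int Area { get { return Width * Height; } }
}

// The domain says "a square is-a rectangle", so Square inherits
// and keeps its sides equal by overriding both setters.
public class Square : Rectangle
{
    public override int Width
    {
        get { return base.Width; }
        set { base.Width = value; base.Height = value; }
    }

    public override int Height
    {
        get { return base.Height; }
        set { base.Width = value; base.Height = value; }
    }
}

public static class RectangleClient
{
    // Written against Rectangle: assumes width and height vary independently.
    public static bool ResizeBehavesLikeRectangle(Rectangle r)
    {
        r.Width = 5;
        r.Height = 4;
        return r.Area == 20;
    }
}
```

Passing a Rectangle returns true, but passing a Square returns false, because setting the height also changed the width. The domain relationship holds, yet the code written against the base class breaks.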

* With regards to the square is not a rectangle example, please be aware the solution outlined does not resolve the problem, as described in Uncle Bob’s comment. The 1996(?) magazine article, The Liskov Substitution Principle, is available, and contains the example as originally described. I didn’t post this URL, since it’s not focused exclusively on the square / rectangle issue.

An Abstract Data Model

This is post 3 from a 7 part series entitled Technical Achievements in my Last Project.

Overview

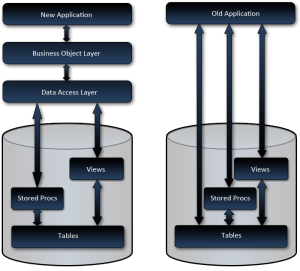

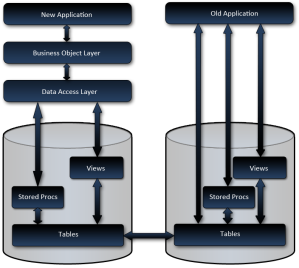

Normally, when I build a new system, I design the new data model based on the requirements, and build my business objects and data access based primarily on that data model*. The remainder of the application is built on the components beneath it, so when you change something at the bottom, like the data model, changes ripple throughout the application. The data model serves as the foundation of my application.

Now as far as this project goes, one of the important requirements was to deliver the new system incrementally, while leaving the older system to run in parallel until completely replaced.

Parallel Data Models

This presented a bit of a dilemma for me since the current database was … well … lacking, and I was planning to refactor it enough to make it a very unstable foundation for the old system. I wanted to refactor it for a number of reasons, including: missing primary keys, no foreign keys, no constraints, data fields which were required but not there, data fields which were there but not used, data fields containing 2 or more pieces of information, and tables which should have been multiple tables. Not to mention the desire to achieve a consistent naming convention without the insane column names using characters like ‘/’ and ‘?’ … seriously.

However, the parallel systems requirement complicated this. I mean, how do you manage parallel systems when one of them needs a stable foundation and the other is so temperamental that you don’t want to touch it?

My options as I saw them were something like:

- Scrap the data model refactoring.

  This really didn’t get much thought. Well it did, but the thought was, is this the best route for the client? And if so, should I offer to help them find my replacement or just leave? I definitely wasn’t up for replacing one unmaintainable piece of junk with another.

- New data model and re-factor the existing app.

  The existing application was a total nightmare built in classic Access spaghetti code fashion. Just touching that looked like going down a rabbit hole of certain doom.

- New application on the old data model and refactor the data model later.

  This would have caused a real disconnect between the data model and the application. I’m not sure if the data model and application ever would have lined up properly. Not to mention the client’s probable later decision not to complete that part of the project since everything worked. This seemed like a very bad idea.

- Build a parallel data model for the new system, while leaving the old system as is.

  From a development point of view, this seemed like the best alternative, but keeping an active database in sync presented a serious, possibly unconquerable, challenge.

The final option of refactoring the data model immediately and basing all construction on a solid foundation was definitely the most appealing. But how do we keep it in synch? I’m sure there are tools out there for that, but with a possibly dramatically different data model? With active live data? Even if there are tools, I doubt the price would have been within the project’s budget**. And if such a tool did exist, how would we bring concurrency issues back to the users who caused the conflict?

Abstract Data Model

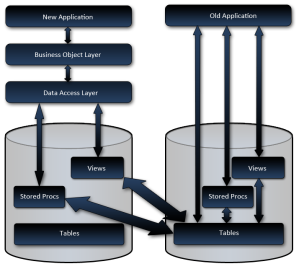

That’s when I had the idea: why not just build an abstraction layer on the database? Why not manage the data all in one database while abstracting out the other data model? Why not build a simulated data model? Why not just redirect all my views and procs to the other database?

This was so bloody simple. Why hadn’t I ever heard of anybody else doing this before?

So the plan was to refactor the data model, build a concrete database, and instead of having its stored procedures and views point to its own tables as they normally would, have them point to the tables in the other database. All changes would proceed as usual. For example, if the client had a change request which required a new field in a table, it would be added to the physical table, the views and stored procedures would be updated, and the applications would change to accommodate it. And when the old system was completely replaced, all that would need to be done is to rewrite the DML to direct to the current system. Even the data transition would be easier since we’d already have views aggregating data in the expected format!

I was pretty excited about this when I designed it and told a few developer friends, who thought it was either a stupid idea, problem-ridden, or pointless at best. Now I do have a lot of stupid and pointless ideas, but I didn’t feel like this was one of them.

Implementation Challenges

So how did I implement it?

Well once the new data model was finished, I wrote the views and stored procedures, as you might expect, but at this point you run into the following challenges:

- Required data missing from the existing database

  For example: a create date for products, so the business knows when a product was added to the system.

- Existing data in old system requires new values.

  For example: an order has a boolean status field for ‘pending’ & ‘completed’, but the business requires the statuses to be changed to ‘pending’, ‘ordered’, ‘shipped’.

- Non-existing data tables need to be simulated

  For example: let’s say the business wants the user to be able to request product literature on the order with regular products. You’ll need to simulate orders for product literature ordered via the old system.

The non-existing data tables were easily simulated with a view. However, these often came with a performance penalty. This is one of the few cases where the new application needed minor modifications to get around. Basically, different views were created for different situations, and the data access component would select the most appropriate view based on the circumstance.

Extension Tables

The missing required data and data changes (like status codes) were handled with extension tables.

So if I had a table named ‘order’ for example, I would create a new table called ‘order_x’, with a matching primary key column, plus columns for data that was required but missing, and data which required changing. Then insert, update, and delete triggers would be added to the ‘order’ table so changes from the old system would keep the extension table up to date. And procs and views on the new system would join the 2 tables to represent it as a cohesive unit.

If the current fields required value changes and/or new values, the new values would be stored in a field in the extension tables, and the update trigger on the main table would update the status when it changed from the old system. In situations where the data did not synch up 1 to 1, certain column mapping rules would be used. To extend on the order status example; ‘pending’ in the old system is the same as ‘pending’ in the new system, but what about ‘completed’? Is that ‘ordered’ or ‘shipped’? It might be mapped so if the old system updates the order to ‘completed’, it would change the extension table to ‘ordered’, and if the new system updated the status to either ‘ordered’ or ‘shipped’, the ‘order’ table status would be updated to ‘completed’.
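In the project these mapping rules lived in the triggers, but the rules themselves can be sketched in a few lines of C#. The enum and class names below are hypothetical and only restate the order status example above; they are not code from the project.

```csharp
using System;

// Hypothetical names; this only restates the mapping rules described above.
public enum OldOrderStatus { Pending, Completed }
public enum NewOrderStatus { Pending, Ordered, Shipped }

public static class OrderStatusMapping
{
    // Old system changed the status: 'completed' is ambiguous in the new
    // model, so by rule it maps to 'ordered'.
    public static NewOrderStatus FromOld(OldOrderStatus status)
    {
        return status == OldOrderStatus.Pending
            ? NewOrderStatus.Pending
            : NewOrderStatus.Ordered;
    }

    // New system changed the status: both 'ordered' and 'shipped' collapse
    // to 'completed' in the old model.
    public static OldOrderStatus FromNew(NewOrderStatus status)
    {
        return status == NewOrderStatus.Pending
            ? OldOrderStatus.Pending
            : OldOrderStatus.Completed;
    }
}
```

Written out this way, it is easy to see that the mapping only loses information in one direction: the old ‘order’ table cannot tell ‘ordered’ from ‘shipped’, which is why the extension table has to hold the new value.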

The Dirty Data Problem

But the biggest problem was dirty data. This was a killer! This is the one challenge which plagued us throughout the entire project and knocked us off our schedule continuously. Because the old system was still being used, which offered the users absolutely no restrictions; we were getting situations which never could have been predicted. This was causing the application to act in unexpected ways, and even after making changes to accommodate the dirty data, we received endless support inquiries on unexpected behavior caused by null data and unexpected values.

There were changes to the application based on this as well. We actually had to change our business objects to set default enum values and make most properties nullable types, even though in the new data model, they were not nullable. This doesn’t affect input, but anywhere that data was being read from the database, we had to accommodate it. These nullable types will not require changing when the old system is completely replaced, but they do represent a smell which I hope somebody will eventually eliminate.
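To give a feel for it, here is a rough sketch of a business object written defensively against dirty data. The OrderData class, its properties, and the FromRaw method are hypothetical stand-ins, not the project’s actual business objects.

```csharp
using System;

// Hypothetical stand-in for a business object; not the project's real code.
public enum OrderStatus { Unknown = 0, Pending, Ordered, Shipped }

public class OrderData
{
    // Nullable even though the new data model declares these columns NOT NULL.
    public int? CustomerId { get; set; }
    public DateTime? CreatedOn { get; set; }

    // Falls back to a default enum value when the stored value is unusable.
    public OrderStatus Status { get; set; }

    // Raw values as they might arrive from a data reader: null, DBNull.Value,
    // or something unexpected written by the old system.
    public static OrderData FromRaw(object customerId, object createdOn, object status)
    {
        OrderData order = new OrderData();
        order.CustomerId = (customerId is int) ? (int?)(int)customerId : null;
        order.CreatedOn = (createdOn is DateTime) ? (DateTime?)(DateTime)createdOn : null;
        order.Status = ParseStatus(status);
        return order;
    }

    private static OrderStatus ParseStatus(object raw)
    {
        string text = raw as string;
        if (text != null && Enum.IsDefined(typeof(OrderStatus), text))
            return (OrderStatus)Enum.Parse(typeof(OrderStatus), text);
        return OrderStatus.Unknown;
    }
}
```

The smell is visible right in the types: every consumer of CustomerId now has to think about a null that the new data model says can never happen.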

Conclusion

Overall though, I’d say this strategy was an overwhelming success. Other than the dirty data issue, which still rears its head every now and again, there have been no problems since it was first deployed.

If you can get away from a parallel deployment, I would recommend doing so, but if you can’t, I really think this strategy is a good one.

EDIT: After I posted this, it occurred to me that this strategy really cost almost nothing, since the biggest costs were in setting up the views to extract the data out of the system in the expected format, which would have needed to be done when the data was moved to the new system anyway. The only real extra work was the extension tables and abstract procs, neither of which was very difficult once the mapping was established in the views. My colleague Ben Alabaster also pointed out that even if we had bought an overpriced synch tool, configuring the tool would have taken longer than setting up my solution.

Credit-Thank you Ben Alabaster for the illustrations.

* I need a pretty good reason to build a data model and object model that are different. I have done it, but it’s rare to have a compelling enough reason.

** At the time I wasn’t aware of any tools to do this. Karen Lopez was kind enough to let me know that TIBCO & Informatica may have done the job, but are expensive. From what I can tell, these tools would have been more expensive than the strategy I implemented. Thanks Karen.

How to enforce a foreign key constraint against multiple tables

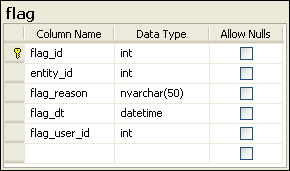

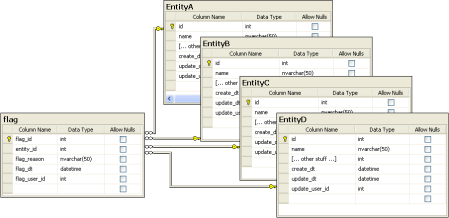

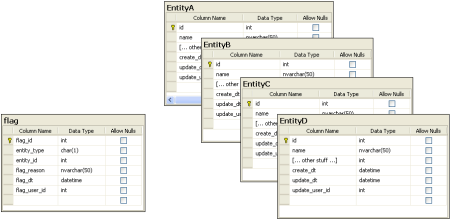

I am building a web app with Ben Alabaster, and one of the requirements is for the user to be able to flag items for moderators. So the user can flag entity A, entity B, entity C, etc…

So I created a single flag table.

I then tried to tie it to the entity tables, hoping for something like

Where all the foreign key relationships were from [flag].[entity_id] to [EntityX].[id]

Then when I wanted the 20 most-flagged items of a particular entity (B in this case), I could run a query like

```sql
select top 20 e.[name], count(*) "count"
from entityB as e
left join flag as f
    on f.entity_id = e.id
where f.entity_type='B'
group by e.[name]
order by count(*) desc
```

Unfortunately, if you were to create the above table relationship, and run the following inserts

```sql
insert into EntityA( id, name) values (1, 'EntityA');
insert into EntityB( id, name) values (2, 'EntityB');
insert into EntityC( id, name) values (3, 'EntityC');
insert into EntityD( id, name) values (4, 'EntityD');
```

The following statement

```sql
insert into flag(entity_id, flag_reason) values(5, 'Testing without a valid FK value.');
```

would fail, as expected, with the following error: “The INSERT statement conflicted with the FOREIGN KEY constraint “FK_flag_EntityA”. The conflict occurred in database “test”, table “dbo.EntityA”, column ‘id’.”

But

```sql
insert into flag(entity_id, flag_reason) values(1, 'Testing the FK to entity A.');
```

would also fail, which was undesired, with the following error: “The INSERT statement conflicted with the FOREIGN KEY constraint “FK_flag_EntityB”. The conflict occurred in database “test”, table “dbo.EntityB”, column ‘id’.” +

So, my options with regards to referential integrity are:

- Ditch the referential integrity, which I am vehemently opposed to. ++

- Create multiple flag tables, each with the exact same schema, but a different Foreign Key relationship, which just seems wrong.

- Managing referential integrity via triggers.

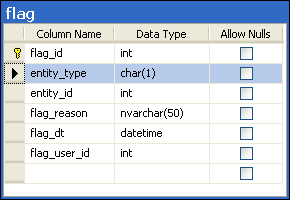

While I’m not a big fan of triggers, the ‘Managing referential integrity via triggers.’ option seems like the only tolerable one. So I added the [entity_type] column to my flag table.

Removed the relationships

And wrote the following trigger to manage the foreign key relationship.

```sql
-- =============================================
-- Description: maintain referential integrity on
--              a column which is a FK for different tables
-- =============================================
CREATE TRIGGER flag_entity_id_fk
ON flag
AFTER INSERT,UPDATE
AS
BEGIN
    declare @entity_type char(1);
    declare @entity_id int;
    declare @cnt int;

    -- SET NOCOUNT ON added to prevent extra result sets from
    -- interfering with SELECT statements.
    SET NOCOUNT ON;

    -- get info
    -- NOTE: this reads a single row from inserted, so a multi-row
    -- INSERT or UPDATE has only one of its rows validated.
    select @entity_type=entity_type,
           @entity_id=entity_id,
           @cnt=0
    from inserted;

    -- check if records exist
    if 'A' = @entity_type
    begin
        select @cnt=count(*)
        from entityA
        where id=@entity_id;
    end
    else if 'B' = @entity_type
    begin
        select @cnt=count(*)
        from entityB
        where id=@entity_id;
    end
    else if 'C' = @entity_type
    begin
        select @cnt=count(*)
        from entityC
        where id=@entity_id;
    end
    else if 'D' = @entity_type
    begin
        select @cnt=count(*)
        from entityD
        where id=@entity_id;
    end

    -- records exist? exit
    if 0 < @cnt
    begin
        return;
    end

    -- no? error
    raiserror( 'Unable to find foreign key match on entity type ''%s'', id ''%d''.', 16, 1, @entity_type, @entity_id);
    rollback transaction;
END
```

Now, when you run

```sql
insert into flag(entity_type, entity_id, flag_reason) values('B', 5, 'Testing without a valid FK value.');
```

The trigger doesn’t find a match in the appropriate table, rolls back the insert, and gives you a descriptive error message.

Unable to find foreign key match on entity type ‘B’, id ‘5’.

However, a good value is accepted.

```sql
insert into flag(entity_type, entity_id, flag_reason) values('B', 2, 'Testing with a valid FK value.');
```

I’m still not happy with this approach, but it does seem to be the lesser of all the evils. Please let me know with a comment if there is another option I’ve overlooked. Thanks.

* Frankly I was surprised it even compiled.

+ Unless of course you were unfortunate enough to test this in a coincidental situation where all tables happened to contain the id of every test you ran.

++ Yes ‘vehemently’

EDIT (11/10/2009) : It just occurred to me that this article does not take into account what would happen if the entity tables were to delete a row which this table was pointing to. When I designed my tables this was taken into account, but since we are not planning to allow actual deletions, it was left out. However, if you were to implement this strategy, where entities could be deleted, a delete trigger would need to be created for each entity table.

How to eliminate analysis paralysis

Over the first decade of my programming career, one trend became very obvious to me. I noticed that I could always increase my efficiency dramatically in the 11th hour before a deadline. It took a long time to see (like a decade), but I finally saw the truth…

I now know:

- Without the deadline, I had Analysis Paralysis

- Analysis Paralysis is caused by fear

- Analysis Paralysis is specifically caused by the fear of making decisions

In the 11th hour before a deadline, I made decisions immediately, whereas without the deadline, I’d ponder endlessly. Once I realized this, it was very easy to fix: get all the information, give myself a time limit (1-5 minutes), make a decision, and start.

This was an incredible productivity boost!

Here’s how I streamlined my own personal development process to ‘get all the information’:

- I list all my options for each design decision

- I pick the best option(s) based on pros/cons (may be more than one)

- I list the risks of the best option(s)

- Then for each risk, I design & write a ‘conclusive’ proof of concept

- If the proofs of concept show it will NOT work, then I toss the idea, pick another one & repeat.

A few things to keep in mind:

- A ‘Proof Of Concept’ is a minimal app to prove something. (mine are usually 1-6hrs)

- If 2 or more options are equal, I give myself a time limit (1-5 minutes) and make a decision … any decision, and don’t look back.

- Trust yourself to be able to deal with any problems you hit which were not taken into account at design time.

Copyright © John MacIntyre 2009, All rights reserved

WARNING – All source code is written to demonstrate the current concept. It may be unsafe and not exactly optimal.

My name is John MacIntyre.
