My Clean Code Experience No. 1 (with before and after code examples)

Public Code Review

Robert C. Martin was kind enough to review the code in this post on his new blog, Clean Coder. Be sure to read his review when you finish reading this post.

Introduction

After I expressed an interest in reading Robert C. Martin’s books, one of my Twitter followers was kind enough to give me a copy of Uncle Bob’s book Clean Code as a gift*. This post is about my first refactoring experience after reading it and the code resulting from my first Clean Code refactor.

Sample code

The code used in this post is based on the data access layer (DAL) used in a side project I’m currently working on. Specifically, my sample project is based on a refactor of the DAL classes for comment data. The CommentData class and surrounding code were simplified for the example, in order to focus on the DAL’s refactoring rather than the comment functionality. Of course, the comment class could be anything.

Download my Clean Code refactor sample project (VS2008)

Please notice:

1. The database can be generated from the script in the SQL folder

2. This code will probably make the most sense if you step through it

3. This blog post is about 1,700 words, so if you aren’t into reading, you will still get the gist of what I’m saying just from examining the source code.

What Clean Code isn’t about

Before starting, I want to point out that Clean Code is not about formatting style. While we all have our curly brace positioning preferences, it really is irrelevant. Clean Code strikes at a much deeper level, and although your ‘style’ will be affected tremendously, you won’t find much about formatting style.

My original code

My original comment DAL class is in the folder called Dirty.Dal, which contains a single file, CommentDal.cs, holding the CommentDal class. This class is very typical of how I wrote code before reading this book**.

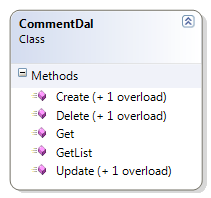

The original CommentDal class is 295 lines of code altogether and has a handful of well-named methods. Now, 295 lines of code is hardly awful, it doesn’t seem very complex relatively speaking, and really, we’ve all seen (and coded) worse. But although the class interface does seem pretty simple, the simplicity of its class diagram hides the complexity of its code.

    public static void Create(IEnumerable<CommentData> Comments, SqlConnection cn)
    {
        // validate params
        if (null == cn) throw new ArgumentNullException("cn");
        if (cn.State != ConnectionState.Open) throw new ArgumentException("Invalid parameter: connection is not open.", "cn");
        if (null == Comments) throw new ArgumentNullException("Comments");
        foreach (CommentData data in Comments)
        {
            if (data.CommentId.HasValue)
                throw new ArgumentException("Create is only for saving new data. Call save for existing data.", "data");
        }

        // prepare command
        using (SqlCommand cmd = cn.CreateCommand())
        {
            cmd.CommandText = "ins_comment";
            cmd.CommandType = CommandType.StoredProcedure;

            // add parameters
            SqlParameter param = cmd.Parameters.Add("@comment_id", SqlDbType.Int);
            param.Direction = ParameterDirection.Output;
            cmd.Parameters.Add("@comment", SqlDbType.NVarChar, 50);
            cmd.Parameters.Add("@commentor_id", SqlDbType.Int);

            // prepare and execute
            cmd.Prepare();

            // update each item
            foreach (CommentData data in Comments)
            {
                try
                {
                    // set parameter
                    cmd.Parameters["@comment"].SetFromNullOrEmptyString(data.Comment);
                    cmd.Parameters["@commentor_id"].SetFromNullableValue(data.CommentorId);

                    // save it
                    cmd.ExecuteNonQuery();

                    // update the new comment id
                    data.CommentId = Convert.ToInt32(cmd.Parameters["@comment_id"].Value);
                }
                catch (Exception ex)
                {
                    string msg = string.Format("Error creating Comment '{0}'", data);
                    throw new Exception(msg, ex);
                }
            }
        }
    }

This method can be simplified dramatically into a more readable style with fewer control statements.

But first, notice how the method is segmented into groupings of similar lines, with each grouping isolated by a single line of white space both before and after it, plus a comment to prefix most groupings. Each of these groupings is a smell, indicating that it should be its own method.

Before reading Clean Code, this was clean to me … this was beautiful code to me.

My new ‘clean’ code

I’ve got a feeling I missed a lot in this book and will probably end up rereading it several times, but the biggest takeaways from reading it in my first pass were:

Smaller, well-named classes and methods are easier to maintain and read. You may notice in the Clean.Dal directory that the classes are smaller, with file sizes hovering around the 50 LOC mark. That is 50 LOC for an entire class, when in the past only my smallest methods would be less than 50 LOC. I’ve now realized that no code grouping is too small to separate into its own property, method, or even class***. Sometimes it’s wise to refactor a single expression into a property just to label it****.
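To make the "label a single expression" idea concrete, here is a minimal sketch. The `Comment` class, its `IsNew` property, and `CommentGuard` are hypothetical names invented for illustration; only the nullable `CommentId` mirrors the `CommentData` class used in this post.

```csharp
using System;

// Hypothetical stand-in for CommentData; only CommentId mirrors the original.
public class Comment
{
    public int? CommentId { get; set; }

    // The expression is trivial, but the property name states what it means.
    public bool IsNew
    {
        get { return !CommentId.HasValue; }
    }
}

public static class CommentGuard
{
    // Reads as a sentence at the call site instead of a HasValue check.
    public static void ThrowIfAlreadySaved(Comment comment)
    {
        if (comment == null) throw new ArgumentNullException("comment");
        if (!comment.IsNew)
            throw new ArgumentException("Create is only for saving new data.", "comment");
    }
}
```

The property adds no behavior; its whole value is that callers read `comment.IsNew` rather than decoding what a missing id implies.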

Here is the equivalent of my new Create method:

    public static void Execute(IEnumerable<CommentData> comments, int userId, SqlConnection cn)
    {
        ThrowExceptionIfExecuteMethodCommentsParameterIsInvalid(comments);
        using (CommentInsertCommand insCmd = new CommentInsertCommand(cn))
        {
            foreach (CommentData data in comments)
                data.CommentId = insCmd.Execute(data, userId);
        }
    }

From the above code, you may notice not only how much smaller and simpler the ‘Create’ method has become, but also that its functionality has been moved from a method to its own smaller class. The smaller class is focused on its single task of creating a comment in the database and is therefore not only easier to maintain, but will only require maintaining when a very specific change in functionality is requested, which reduces the risk of introducing bugs.

The small class / property / method idea extends to moving multi-line code blocks following control statements into their own methods.

For example:

    while (SomethingIsTrue())
    {
        blah1();
        blah2();
        blah3();
    }

Is better written as

    while (SomethingIsTrue())
        BlahBlahBlah();

With the body of the ‘while’ moved into its own BlahBlahBlah() method. It almost makes you wonder if having braces follow a control statement is a code smell, doesn’t it? *****
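For a version of that loop you can actually run, here is a minimal sketch. `CommentQueueProcessor` and its members are hypothetical and not part of the sample project; they only demonstrate the loop body being moved into a named method.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical class: drains a queue of raw comment strings.
public class CommentQueueProcessor
{
    private readonly Queue<string> pending;
    private readonly List<string> saved = new List<string>();

    public CommentQueueProcessor(IEnumerable<string> comments)
    {
        pending = new Queue<string>(comments);
    }

    public IList<string> Saved
    {
        get { return saved; }
    }

    // The loop now reads as one sentence: while there is work, do the work.
    public void ProcessAll()
    {
        while (HasPendingComments())
            SaveNextComment();
    }

    private bool HasPendingComments()
    {
        return pending.Count > 0;
    }

    // Formerly the multi-line block inside the while loop.
    private void SaveNextComment()
    {
        string comment = pending.Dequeue();
        string trimmed = comment.Trim();
        saved.Add(trimmed);
    }
}
```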

Also, as part of the small, well-named methods idea, detailed function names make comments redundant and obsolete. I’ve come to recognize most comments as a code smell. Check this out: my colleague Simon Taylor reviewed my code while I was writing this, and pointed out that although my dynamic SQL was safe, colleagues following me may not see the distinction of what was safe, and may add user-entered input into the dynamic SQL. He suggested a comment for clarification.

He was absolutely right, but instead of adding a comment, I separated it into its own method, which I believe makes things very clear. See below:

    protected override string SqlStatement
    {
        get
        {
            return GenerateSqlStatementFromHardCodedValuesAndSafeDataTypes();
        }
    }

    protected string GenerateSqlStatementFromHardCodedValuesAndSafeDataTypes()
    {
        StringBuilder sb = new StringBuilder(1024);
        sb.AppendFormat(@"select comment_id,
                                 comment,
                                 commentor_id
                          from {0} ",
                        TableName);
        sb.AppendFormat("where Comment_id={0} ", Filter.Value);
        return sb.ToString();
    }

Not only is a method name less likely to go stale than a comment, it also clearly identifies exactly what is going on, both at the method declaration and everywhere it is called.

Moving control flow to the polymorphic structure is another technique to achieve clean code. Notice the ‘if’s in the ‘Clean.Dal’ version are pretty much reserved for parameter validation. I’ve come to recognize ‘if’s, especially when they deal with a passed-in Boolean or Enum typed method parameter, as a very distinct code smell, which suggests a derived class may be more appropriate.
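As an illustration of trading an enum-driven ‘if’ for a derived class, here is a minimal sketch. Every type name below is hypothetical, and while `ins_comment` is the stored procedure named earlier in this post, `upd_comment` is an assumed name used only for the example.

```csharp
using System;

// Before: one method branching on an enum parameter (hypothetical example).
public enum CommandKind { Insert, Update }

public static class BranchingNamer
{
    public static string ProcedureFor(CommandKind kind)
    {
        if (kind == CommandKind.Insert) return "ins_comment";
        return "upd_comment"; // assumed procedure name
    }
}

// After: the decision lives in the class hierarchy, so no 'if' is needed.
public abstract class CommentCommand
{
    protected abstract string ProcedureName { get; }

    public string Describe()
    {
        return "exec " + ProcedureName;
    }
}

public sealed class InsertCommentCommand : CommentCommand
{
    protected override string ProcedureName
    {
        get { return "ins_comment"; }
    }
}

public sealed class UpdateCommentCommand : CommentCommand
{
    protected override string ProcedureName
    {
        get { return "upd_comment"; } // assumed procedure name
    }
}
```

Adding a third kind of command in the second version means adding a class, not editing a branch that every existing caller passes through.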

Reusable base classes are also a valuable by-product of writing clean code. A reusable class library is not the goal in itself, in an anti-YAGNI kind of way, but is instead a natural side effect of organizing your code properly.

Clean code is also very DRY. There is very little if any duplicated code.

The structure is 100% based on the working, existing, code, and not on some perceived structure based on real world domain is-a relationships which don’t always match up perfectly.

One more thing, unfortunately not everything is going to fit nicely into the object oriented structure, and sometimes a helper class or extension method will be the best alternative. See the CommentDataReaderHelper class as an example.

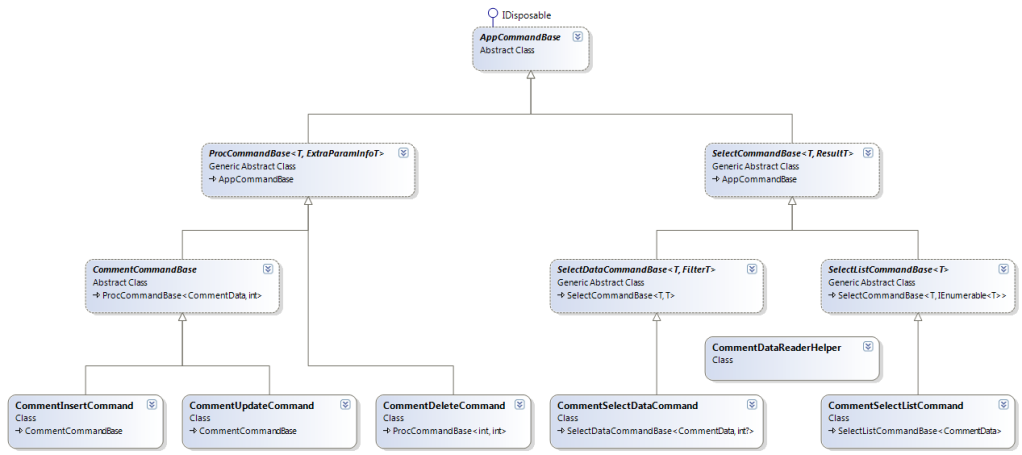

A closer look

Here’s a quick overview of the class hierarchy; we’ll use CommentUpdateCommand as an example:

One of the first things you may notice about this class is that there is only one entry point: the static method Execute(). This provides a very simple and obvious way to use the class. You can place a breakpoint in this method and step through the class and its hierarchy to fully understand it.

The class hierarchy is based completely on existing working code and was designed to share functionality effectively without duplicating code. Each class is abstracted to the point it needs to be, and no more, yet the duties of each class in the hierarchy are crystal clear, as if it had been designed from the beginning to look like this.

Here are the classes:

The AppCommandBase class manages an SqlCommand object.

The ProcCommandBase class executes a given stored procedure.

The CommentCommandBase class shares functionality specific to the Comment table.

The CommentUpdateCommand class implements functionality specific to the comment update stored procedure and hosts the static entry point method ‘Execute’.

The cost of writing Clean Code

When I started writing this, I didn’t know there were any downsides to writing clean code, but once I began this post, and started gathering evidence to prove how awesome the clean code strategy was, some evidence ruled against my initial euphoria. Here is a list of observations which could be looked upon unfavorably.

Increased LOC – This was really pronounced in a later experience (Clean Code Experience No. 2 coming soon), but I actually thought the LOC was decreased in this sample. At least once you take into account the reusable base classes … but it wasn’t. While I haven’t done an in depth analysis of the LOC, it appears that the code specific to the comments, before taking into account any abstract base classes, has a similar LOC count as the Dirty.Dal class. Now much of this is from structure code, like method declarations, but it was still disappointing.

Increased structural complexity – I suppose this should have been obvious, but it didn’t occur to me until writing this blog post, the complexity didn’t really disappear; it’s just been moved from the code to the polymorphic structure. However, having said that, I suspect, maintaining Clean Code with a vigorous polymorphic structure would be lower cost than a traditional code base.

Method call overhead – With all the classes, properties, and methods, it has occurred to me that method call overhead may carry a performance trade-off. I asked Uncle Bob about this on Twitter, and his reply was “Method call overhead is significant in deep inner loops. Elsewhere it is meaningless. Always measure before you optimize.” Pretty good advice, I think.

But even after realizing the trade offs, Clean Code is still, clearly, the way to go.

In Summary

The Clean Code book has changed my outlook on how I write, and I think everyone should be exposed to this material. I’ve already re-gifted my Clean Code book, and the drive to write this blog post comes from a burning desire to share this information with my new colleagues.

I would love to hear your input on this blog post, my sample code, and the whole Clean Code movement. Please comment below.

Reminder

Don’t forget to read Uncle Bob’s review on his new blog, Clean Coder.

* Why did somebody I’ve never met send me a book as a gift? Because he wanted me in the C.L.U.B. (Code Like Uncle Bob). And to answer your next question, yes I have since re-gifted the book.

** Very typical of how I wrote code before reading Clean Code … with the exception of some pretty nasty exception handling code. I removed it not due to its ugliness, but because its ugliness may have taken away from the code logic refactoring that I am trying to emphasize.

*** And when I say no code is too small, stay tuned for Clean Code Experience No 2, which was so unconventional, it left me questioning if I’d taken this concept way too far.

**** Although to be fair, my first exposure to this idea was from Scott Hanselman after reading his first MVC book (this link is to his second MVC book).

***** Wrap your head around that, those of you who feel a single line following a control statement should have braces. LOL

Copyright © John MacIntyre 2010, All rights reserved

PS-Big thanks to Ben Alabaster for pushing me to write this post.

Large Application Estimation in 2 Weeks

This is post 2 from a 7 part series entitled Technical Achievements in my Last Project.

My role in this project started out by being asked to assess the existing project, provide insight into options to move it forward, with one of those options being a rewrite*. An estimation was needed for the rewrite option, so I was given 2 weeks to do it.

This post explains how I was able to pull off this massive estimation undertaking in a mere 2 weeks.

Ideally, the project documentation from the existing system could be used to give an excellent estimate, but this is a blog post, not a fairy tale. Or a thorough specification could have been derived from an in-depth analysis of the existing application, which business could have adjusted as needed, and used to conclude a reasonable estimate. But this is the real world, and this is a real business; and I was given a real (short) deadline.

Now I should also mention this wasn’t a 20 KLOC project, it was a fairly complex piece of software with over 500 KLOC** and almost 1800 database objects along with satellite applications. Everybody understood how this short timeframe severely limited the accuracy of anything I would be able to provide, but I was determined to do the best job possible.

So my next goal was to figure out how to do a somewhat accurate estimate, given the constraints, where I wouldn’t be setting myself up for a lynching at the end of it. I explored many different ways to get a rough idea of the entire project’s scope.

This is what I finally settled on:

1. Dumped all Microsoft Access Objects

   First I modified an Access VBA script I found for exporting objects to text files and exported everything.

2. Dumped all database DDL

   I wrote a little command line utility to loop through a SQL Server database, pull the DDL for each object using the sp_helptext stored procedure, and write it out to text files.

3. Created an analysis database

   Created an analysis database primarily comprised of three tables: one for all the entities the application is comprised of, a second for linking which entity called which, and a third for linking menu items to all dependent forms.

4. Collected the names of all objects into the database

   I wrote another little command line utility to read each code file dumped out in steps 1 & 2, and add the object’s name and a few other statistics.***

5. Determined all entity relationships

   I wrote another command line utility which traversed each code file, reading in the code, and determining which of the known entities it was dependent upon. This information was stored in the second linking table in the analysis database.

6. Determined dependencies of each menu item

   Wrote yet another command line application to traverse the dependencies to determine which menu item could eventually load which forms. Certain forms were ignored in the calculation, including a) previously calculated forms (obviously), b) menu item starting point forms, and c) specific forms which could load almost every other form in the application.

7. Ball park estimated each GUI component

   Loaded each of the nearly 400 forms and 200 reports, and did ball park estimations on each one, deriving what business logic I could glean from the UI. I used the CRUDLAFS estimation technique to ensure I didn’t miss any basic functionality.

   Other than trying to figure out how I would do the estimation, this was the most time consuming task. Just think, even at a mere 3 minutes per form or report, we are still talking 30 hours of tedious effort.

8. Totaled the estimates

9. Menu estimate breakdown

   In order to determine the time to replace one complete menu item with all functionality from that starting point, I needed to sum the estimates from all dependencies from that form onward. So I queried the times for each menu item starting point, summing all dependency estimates, and added it to my report.
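The dependency traversal and the per-menu roll-up can be sketched as a simple graph walk. This is not the original utility, which worked against the analysis database; it is a minimal in-memory sketch, and the `MenuEstimator` class, its parameter names, and the dictionary shapes are all assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;

public static class MenuEstimator
{
    // Sums the estimate of a menu item's starting form plus every form
    // reachable from it, applying the three "ignore" rules described above.
    public static double TotalHours(
        string startForm,
        IDictionary<string, List<string>> calls,    // form -> forms it can load
        IDictionary<string, double> estimateHours,  // form -> ball park hours
        ICollection<string> menuStartForms,         // starting points of menu items
        ICollection<string> hubForms)               // forms that load almost everything
    {
        var visited = new HashSet<string>();
        var toVisit = new Stack<string>();
        toVisit.Push(startForm);
        double total = 0;

        while (toVisit.Count > 0)
        {
            string form = toVisit.Pop();
            if (!visited.Add(form)) continue;       // a) already counted

            double hours;
            if (estimateHours.TryGetValue(form, out hours)) total += hours;

            List<string> dependencies;
            if (!calls.TryGetValue(form, out dependencies)) continue;

            foreach (string next in dependencies)
            {
                if (menuStartForms.Contains(next)) continue; // b) other menu entry points
                if (hubForms.Contains(next)) continue;       // c) forms that reach everything
                toVisit.Push(next);
            }
        }
        return total;
    }
}
```

Skipping hub forms matters more than it might seem: without rule c), nearly every menu item would inherit the estimate for almost the entire application.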

Now there are some serious issues with this strategy, like the high probability of missed complexity, missed functionality, and just overall inaccuracies, but these issues were known and pointed out at the time with the estimate.

Was the estimate a success? Was it accurate? Honestly, I’d say it was a success, but it didn’t turn out to be accurate.

…. Wait! What?

How could an estimate be a success if it wasn’t accurate?

Well, let me revise that by saying some of the core underlying assumptions were changed dramatically 5 months into the project which increased complexity far beyond the simplistic web design the estimate was based on.

The big lesson learned from this task wasn’t so much about estimation as it was about managing requirements and sign off. …. But I digress. 😉

Anyway, I think the estimation I performed was well grounded in something, even if that something was not as thoroughly researched as would be ideal. I believe the executed strategy had a good return on investment.


* For the record, I already had more consulting work than I could handle at the time, so while a rewrite was interesting, steering the client into an unwarranted rewrite was not beneficial for anybody.

** LOC sizes include comments, white space, and database object DML.

*** Collecting the other statistics (LOC, etc.) was actually one of my false starts in how to do this analysis.

Copyright © John MacIntyre 2010, All rights reserved

Investigating the relationship between estimation accuracy and task size

Yesterday on StackOverflow Johannes Hansen asked

What is the acceptable upper limit of time allocated to a single development task?

I answered with

If you track your estimate/actual history, you can probably plot hours by accuracy and figure out exactly what number is appropriate for your team.

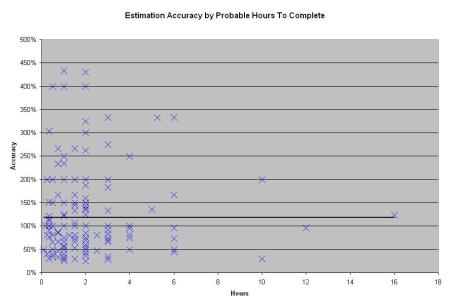

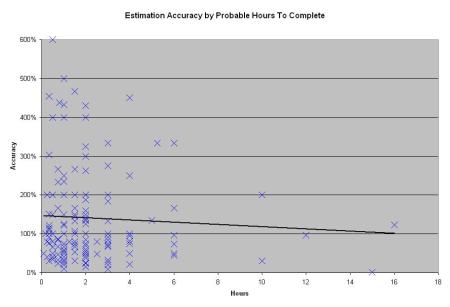

My advice sounded so good I thought I’d try it myself. So I opened the bug tracker where I keep track of my probable and actual times and exported my closed bugs to Excel. I cleaned up a bit by removing any rows with either a 0 probable or actual time, then created a chart.

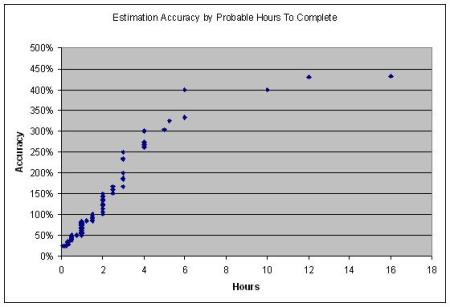

Now when I conceived of this idea, I was expecting something like

Well I wasn’t expecting the plots to be that dense, or to accelerate above 200% so fast, but let’s just say, that general look would have been pleasing to my eye.

Now, I’ve got to say, this is NOT what I was expecting at all. You can kind of see a very dense block under 4 hours and 100%, but it doesn’t tell us very much about the relationship between estimation accuracy and the size of the tasks. So, I then threw a Linear Regression Trendline on the chart hoping it would illuminate an ascending trend. Instead it contradicted my assumptions by declining, suggesting the larger the task, the more accurate I am … which isn’t true at all.

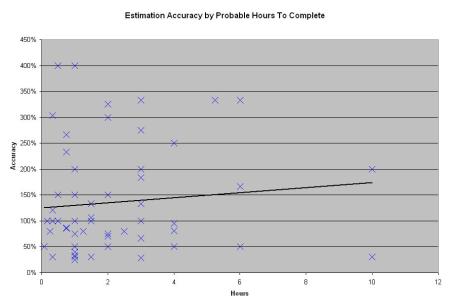

Maybe it’s the outliers. Maybe it’s the weird changes outside of normality causing it to look so horrible. So I sorted the data by the accuracy percentage, dropped the top and bottom 5 percent, redrew the chart and got this.

Still no obvious relationship between the estimated task size and estimation accuracy. But at least my trendline is no longer declining. By flat lining, it’s now suggesting there is no relationship between estimation accuracy and task size.

… hmmm … bugs are included in my data. I wonder if that could be having an effect? I’ve been estimating approximate times bugs will take to resolve for my manager. Most of these bugs have been estimated before even investigating the cause, so that’s not really the same as estimating a defined task. What if I remove them?

I went back to my original data dump, removed all bugs, tickets, and questions so I was left with only new tasks and changes. I again removed the bottom & top 5% and recharted.

Well, I’ve finally got an ascending trendline suggesting my estimates are weaker as tasks get bigger, which is what we expected to happen.
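The clean-up and trendline steps above were done in Excel, but they can be sketched in code for anyone who wants to repeat them on their own data. This is a minimal sketch under stated assumptions: the `TaskRecord` and `EstimateAnalysis` names are hypothetical, and accuracy is assumed to mean actual time as a percentage of the estimate, which the post does not spell out.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class TaskRecord
{
    public double EstimatedHours;
    public double ActualHours;

    // Assumption: accuracy is actual time as a percentage of the estimate.
    public double AccuracyPercent
    {
        get { return ActualHours / EstimatedHours * 100.0; }
    }
}

public static class EstimateAnalysis
{
    // Drops rows with a zero estimate or actual, sorts by accuracy,
    // then trims the given fraction (e.g. 0.05) from each end.
    public static List<TaskRecord> CleanAndTrim(IEnumerable<TaskRecord> tasks, double trimFraction)
    {
        List<TaskRecord> sorted = tasks
            .Where(t => t.EstimatedHours > 0 && t.ActualHours > 0)
            .OrderBy(t => t.AccuracyPercent)
            .ToList();

        int drop = (int)Math.Floor(sorted.Count * trimFraction);
        return sorted.Skip(drop).Take(sorted.Count - 2 * drop).ToList();
    }

    // Ordinary least-squares slope of accuracy (y) against estimated hours (x).
    public static double TrendlineSlope(IList<TaskRecord> tasks)
    {
        double meanX = tasks.Average(t => t.EstimatedHours);
        double meanY = tasks.Average(t => t.AccuracyPercent);
        double covariance = tasks.Sum(t => (t.EstimatedHours - meanX) * (t.AccuracyPercent - meanY));
        double variance = tasks.Sum(t => (t.EstimatedHours - meanX) * (t.EstimatedHours - meanX));
        if (variance == 0)
            throw new InvalidOperationException("All estimates are identical; slope is undefined.");
        return covariance / variance;
    }
}
```

Under that accuracy assumption, a positive slope means larger tasks overrun their estimates by a larger percentage, a slope near zero matches the flat trendline, and a negative slope matches the declining one.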

Conclusion: I’m still not very happy with the scatter chart. I still believe it should look closer to my initial assumptions of what this chart should have looked like. This suggests to me that I need to take another look at my data collection if it’s going to be useful to me at all.

Feedback and constructive criticism welcome.

A very simple Pair Programming IP Rights Agreement

Once in 1998, I sat down with my manager (the only manager I’ve ever had who could program), and we banged out some code for about 2 days. It was a very fast paced synergistic activity where one idea fed another and at the end of 2 days our initial idea morphed into something completely different and a heck of a lot better.

Well, tonight 11 years later, I’ve convinced my colleague Ben Alabaster to come over and pair program. I don’t know how it will go, I’ve got high hopes, but I am confident at the end of the night both Ben and myself will be a little better as programmers, and might have even started something worth finishing.

But two things I do know: 1) if we come up with something good, we’re both going to want to use it. And 2) if we ever get to the point of needing an agreement outlining our IP rights, it will be too late to draft one. So, Ben & I threw together some basic rules yesterday. Frankly, I’m surprised I couldn’t find any on the net already, maybe I over think this stuff more than most people, or perhaps it’s because I just didn’t look that hard.

So here’s what we agreed to:

- Each of us, individually, is free to use any programming concept shared, discovered, or created.

- Each of us, individually, is free to use anything we co-create as part of a larger project with a significant amount of additional functionality. This can be a personal project, business project, or consulting project.

- Both of us must agree before any code or binaries are released, whether as a commercial product or as open source. Each of us will share any credit and/or financial profits equally.

I’d love to hear other people’s perspective and comments about this.

Copyright © John MacIntyre 2009, All rights reserved

6 simple steps to a stress free database change deployment

A few years ago, I was contracted to work on an internal web application in a large Fortune 500 company. Our app was constantly in use, had thousands of users, was fairly active, and had a rather large database (one table had over 300 million records).

I was fortunate enough to work beside a DBA who had among other tasks, the responsibility of running deployment scripts against production. We talked occasionally, and he’d tell me about some of the deployment scripts he would run and the programmers he ran them for. He’d get programmers whose scripts never fail or report errors and would actually request the output text to confirm the DBA did their job. Then there were the programmers who would work against development, and when it was time to deploy, they’d attempt to remember all the subtle database changes made along the way and whack together an SQL script to repeat them. My friend was often rejecting scripts which were obviously never run before and sometimes he’d have to run expedited scripts, because the first was missing a necessary column or two.

Deployments can be a real headache at the best of times, but especially when schema updates to a production database are involved. Don’t get me wrong, you usually have a backup to fall back on, but how long will that take to restore? … Really, you don’t want to resort to the restore, have the database offline for that long, or have your name associated with it.

So gradually I evolved a process which has kept me sane and confident when deploying schema changes to production servers, even on large, sensitive, and active databases.

Here’s my simple 6 step process for deployment sanity. (FWIW-I’ve never called it my ‘6 step process…’ before writing this post. 😉)

Step 1 – Create a central shared directory for all scripts

The first thing you do is store all database changes as scripts in a central shared directory.

I usually store the scripts in a directory structure like

\projects\ProjX\DeploymentScripts\dbname.production\

\projects\ProjX\DeploymentScripts\dbname.archive\deployed.YYMMDD\

Where the ‘dbname.production’ folder stores currently pending scripts. ‘dbname.archive’ stores previously deployed scripts each in their own dated subdirectory.

Step 2 – Create scripts for all changes

Any and all changes are scripted and stored. If you use a tool to adjust your database schema, then you export the generated SQL and run that exported SQL instead of running the tool directly against your database.

Keep scripts in order and don’t change old scripts. If … ok, when you make a mistake, don’t try to go back to an older script and change it, just write a new one to undo the mistake.

Each script is saved in the ‘dbname.production’ folder with the following naming convention:

dbname.##.user.descr.sql

where:

dbname : the database to deploy it to (mostly so you can see it in your Query Analyzer title bar)

## : the sequence number to deploy it in (just increment the highest number)

user : initials of the programmer who wrote the script

descr : a brief description of what the script does

Here are some examples

HR.01.jrm.AdjustedTblEmployees_AddedStartDateColumn.sql

HR.02.jrm.AdjustedProc_sp_GetEmployees.sql

HR.03.jrm.AdjustedProc_sp_ActiveEmployees.sql

Step 3 – Number your scripts

As you may have noticed in step 2, scripts are numbered in the order they are created so that the objects they depend on already exist when they run. Do you like dependent objects to exist? Yeah? … me too.

In case you’re wondering, the single digits are prefixed with ‘0’ so they will sort properly in Windows Explorer. And there is only room for 2 digits since you will rarely if ever create more than 99 scripts before deploying.

Step 4 – Keep track of what has been deployed to where

Ok, so you’ve got your ‘dbname.production’ directory with 10 SQL files in there, you’ve got 3 developers each with their own copy of the database, a testing and/or business validation environment, and production.

Sooo … what’s been deployed to where? It’s pretty easy to lose track of what got deployed to validation last week. … Sorry … did we deploy 5? Or did we stop at 4?

I toyed with a few different ways of doing this, but finally settled on a very obvious and simple solution: keep an empty text file for each database, and update the number in its name each time scripts are run against that database. Since I want them to sort intermingled with the script files, I use a similar naming convention:

dbname.##.zzz–DEPLOYED-TEST

Here are some examples mixed in with the previous examples:

    HR.00.zzz–DEPLOYED-DevBox2
    HR.00.zzz–DEPLOYED-DevBox3
    HR.01.jrm.AdjustedTblEmployees_AddedStartDateColumn.sql
    HR.02.jrm.AdjustedProc_sp_GetEmployees.sql
    HR.02.zzz–DEPLOYED-TEST
    HR.03.jrm.AdjustedProc_sp_ActiveEmployees.sql
    HR.03.zzz–DEPLOYED-DevBox1

Notice how they’re sorted amongst the scripts and it’s obvious what got deployed? I could probably delete the entire text of this post, other than that sample, and you could figure out my deployment process. … But since I enjoy typing … I’ll continue. 😉

When you finish executing your scripts, you would increment the number to reflect the last script run on that database.
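The marker files also make it easy to work out mechanically which scripts are still pending for a given environment. The process described here is entirely manual, so the following is only a minimal sketch of that lookup; the `DeploymentFolder` class and its methods are hypothetical, and it assumes the database name itself contains no dots.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class DeploymentFolder
{
    // Reads the ## sequence number from a name shaped like "dbname.##.rest".
    public static int SequenceOf(string fileName)
    {
        string[] parts = fileName.Split('.');
        if (parts.Length < 3) throw new FormatException("Unexpected file name: " + fileName);
        return int.Parse(parts[1]);
    }

    // Marker files end with "DEPLOYED-<environment>" and have no .sql extension.
    public static bool IsMarkerFor(string fileName, string environment)
    {
        return fileName.EndsWith("DEPLOYED-" + environment, StringComparison.OrdinalIgnoreCase);
    }

    // Scripts numbered higher than the environment's marker, in run order.
    // An environment with no marker file is treated as having nothing deployed.
    public static List<string> PendingScripts(IEnumerable<string> fileNames, string environment)
    {
        List<string> names = fileNames.ToList();
        string marker = names.FirstOrDefault(n => IsMarkerFor(n, environment));
        int lastDeployed = marker == null ? 0 : SequenceOf(marker);

        return names
            .Where(n => n.EndsWith(".sql", StringComparison.OrdinalIgnoreCase))
            .Where(n => SequenceOf(n) > lastDeployed)
            .OrderBy(n => SequenceOf(n))
            .ToList();
    }
}
```

Given the sample listing above, `PendingScripts(files, "TEST")` returns only `HR.03.jrm.AdjustedProc_sp_ActiveEmployees.sql`, while DevBox2 and DevBox3 still need all three scripts and DevBox1 needs none.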

Step 5 – Execute scripts in order

Obviously.

Step 6 – Post deployment clean up

When the scripts are deployed to production, you would create a new deployed.YYMMDD subdirectory with today’s date in the dbname.archive directory and move all the SQL files from the ‘dbname.production’ directory into it. Then renumber the deployment marker files back to 00.

This can be incorporated into almost any database schema change deployment process, and isn’t specific to SQL Server. After all, I started evolving this process while working on an Oracle database.

It has occurred to me that this may be an old school idea and modern database schema diff tools may provide a way to avoid this kind of preparation. But unless you are working as a sole developer, I can’t imagine a diff tool making your life easier than what I’ve outlined above.

I hope your next deployment is less of a nail biter.

Copyright © John MacIntyre 2009, All rights reserved

WARNING – All source code is written to demonstrate the current concept. It may be unsafe and not exactly optimal.

How to eliminate analysis paralysis

Over the first decade of my programming career, one trend became very obvious to me. I noticed that I could always increase my efficiency dramatically in the 11th hour before a deadline. It took a long time to see (like a decade), but I finally saw the truth…

I now know:

- Without the deadline; I had Analysis Paralysis

- Analysis Paralysis is caused by fear

- Analysis Paralysis is specifically caused by the fear of making decisions

In the 11th hour before a deadline, I made decisions immediately, whereas without the deadline, I’d ponder endlessly. Once I realized this, it was very easy to fix: get all the information, give myself a time limit (1-5 minutes), make a decision, and start.

This was an incredible productivity boost!

Here’s how I streamlined my own personal development process to ‘get all the information’:

- I list all my options for each design decision

- I pick the best option(s) based on pros/cons (may be more than one)

- I list the risks of the best option(s)

- Then for each risk, I design & write a ‘conclusive’ proof of concept

- If the proofs of concept show it will NOT work, then I toss the idea, pick another one & repeat.

A few things to keep in mind:

- A ‘Proof Of Concept’ is a minimal app to prove something. (mine are usually 1-6hrs)

- If 2 or more options are equal, I give myself a time limit (1-5 minutes) and make a decision … any decision, and don’t look back.

- Trust yourself to be able to deal with any problems you hit which were not taken into account at design time.

Copyright © John MacIntyre 2009, All rights reserved


My name is John MacIntyre.
