Hey #region haters, why all the fuss?

I hear a lot of programmers, from ones I don’t know to celebrity programmers, saying #regions are a code smell, while the development community as a whole appears to be passionately split.*

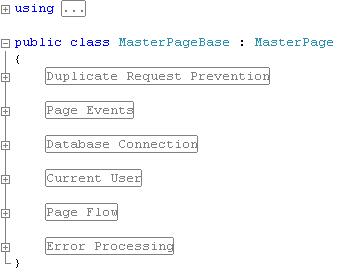

The haters definitely have a point about regions being used to hide ugly code, but when I open a class and see this, it just looks elegant to me.

Now, none of these regions are hiding thousands of lines of ugly code. Actually, most of them contain only 3 properties and/or methods, and the last curly brace is on line 299. So the whole thing, with 17 properties and methods, including comments and whitespace, is only 300 LOC. … Really, how much of a mess could I possibly be hiding?

To me, the only question is whether I should have this functionality in the ContainerPageBase or the MasterPageBase**.

You may also notice the regions I have are not of the Fields / Constructors / Events / Properties / Methods variety. It has taken some time for me to accept that data members (aka fields) do not all need to be at the top of the class, as I was classically trained to put them, and that grouping them by functionality is perhaps a better idea. This philosophy only makes regions that much more valuable.
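A minimal sketch of what grouping by functionality looks like, assuming a hypothetical page base class: the class name, fields, and properties below are illustrative only (the ‘Current User’ region name is borrowed from the footnote below), not the actual ContainerPageBase code. Each region keeps its private field next to the members that use it, instead of collecting every field at the top of the class.

```csharp
using System;

// Hypothetical base class illustrating regions grouped by functionality.
// Each region holds its own backing field next to the members that use it.
public class ContainerPageBaseSketch
{
    #region Current User

    // The field lives with the members that use it, not at the top of the class
    private string _currentUserName;

    public string CurrentUserName
    {
        get { return _currentUserName ?? "Guest"; }
        set { _currentUserName = value; }
    }

    public bool IsLoggedIn
    {
        get { return !"Guest".Equals(CurrentUserName); }
    }

    #endregion

    #region Page Title

    private string _pageTitle = string.Empty;

    public string PageTitle
    {
        get { return _pageTitle; }
        set { _pageTitle = value ?? string.Empty; }
    }

    // Falls back to a placeholder when no title has been set
    public string FormattedTitle()
    {
        return string.IsNullOrEmpty(_pageTitle) ? "Untitled" : _pageTitle.Trim();
    }

    #endregion
}
```

Collapsing either region in the editor hides one complete feature, field included, which is the whole point of grouping this way.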

… is anybody still here? …. have I converted anyone? 😉

* These posts are fairly old, but in my experience in the developer community, the consensus hasn’t changed.

** The Database Connection & Current User regions may have some scratching their heads. There are valid reasons for them; however, the Database Connection region will never be included at this level again. More on that in a future post.

Copyright © John MacIntyre 2010, All rights reserved

Large Application Estimation in 2 Weeks

This is post 2 of a 7-part series entitled Technical Achievements in my Last Project.

My role in this project started when I was asked to assess the existing project and provide insight into options for moving it forward, one of those options being a rewrite*. An estimate was needed for the rewrite option, and I was given 2 weeks to produce it.

This post explains how I was able to pull off this massive estimation undertaking in a mere 2 weeks.

Ideally, the project documentation from the existing system could have been used to produce an excellent estimate, but this is a blog post, not a fairy tale. Alternatively, a thorough specification could have been derived from an in-depth analysis of the existing application, which the business could have adjusted as needed and used to arrive at a reasonable estimate. But this is the real world, this is a real business, and I was given a real (short) deadline.

Now, I should also mention this wasn’t a 20 KLOC project; it was a fairly complex piece of software with over 500 KLOC** and almost 1,800 database objects, along with satellite applications. Everybody understood how severely this short timeframe limited the accuracy of anything I would be able to provide, but I was determined to do the best job possible.

So my next goal was to figure out how to produce a somewhat accurate estimate, given the constraints, without setting myself up for a lynching at the end of it. I explored many different ways to get a rough idea of the entire project’s scope.

This is what I finally settled on:

1. Dumped all Microsoft Access objects

First I modified an Access VBA script I found for exporting objects to text files, and exported everything.

2. Dumped all database DDL

I wrote a little command line utility to loop through a SQL Server database, pull the DDL for each object using the sp_helptext stored procedure, and write it out to text files.

3. Created an analysis database

I created an analysis database made up primarily of three tables: one for all the entities the application is composed of, a second for linking which entity called which, and a third for linking menu items to all their dependent forms.

4. Collected the names of all objects into the database

I wrote another little command line utility to read each code file dumped out in steps 1 & 2, and add each object’s name and a few other statistics.***

5. Determined all entity relationships

I wrote another command line utility which traversed each code file, reading in the code and determining which of the known entities it depended upon. This information was stored in the second (linking) table in the analysis database.

6. Determined the dependencies of each menu item

I wrote yet another command line application to traverse the dependencies and determine which forms each menu item could eventually load. Certain forms were ignored in the calculation, including a) previously calculated forms (obviously), b) menu item starting point forms, and c) specific forms which could load almost every other form in the application.

7. Ballpark-estimated each GUI component

I loaded each of the nearly 400 forms and 200 reports and did ballpark estimates on each one, deriving what business logic I could glean from the UI. I used the CRUDLAFS estimation technique to ensure I didn’t miss any basic functionality.

Other than figuring out how to do the estimate in the first place, this was the most time-consuming task. Just think: even at a mere 3 minutes per form or report, roughly 600 of them still add up to 30 hours of tedious effort.

8. Totaled the estimates

9. Menu estimate breakdown

To determine the time needed to replace one complete menu item, with all functionality from that starting point, I needed to sum the estimates of all dependencies from that form onward. So I queried the times for each menu item starting point, summed all dependency estimates, and added the result to my report.
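Determining entity relationships, traversing each menu item’s dependencies, and totaling the menu estimates boil down to a text scan, a graph traversal, and a sum. The sketch below shows one in-memory way to do that, assuming the dumped code files are already loaded as strings and the per-form hour estimates are already known. DependencyAnalysisSketch and its methods are hypothetical names; the original utilities kept their results in the analysis database rather than in dictionaries. Whole-word regex matching is a simplification that will also pick up names appearing only in comments or string literals.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

// Hypothetical in-memory version of the dependency analysis.
public static class DependencyAnalysisSketch
{
    // Which known entities does one code file mention? (whole-word, case-insensitive)
    public static HashSet<string> FindDependencies(
        string owner, string code, IEnumerable<string> knownEntities)
    {
        var found = new HashSet<string>(StringComparer.OrdinalIgnoreCase);
        foreach (var entity in knownEntities)
        {
            if (string.Equals(entity, owner, StringComparison.OrdinalIgnoreCase))
                continue; // an entity does not depend on itself
            var pattern = @"\b" + Regex.Escape(entity) + @"\b";
            if (Regex.IsMatch(code, pattern, RegexOptions.IgnoreCase))
                found.Add(entity);
        }
        return found;
    }

    // Every form reachable from a menu item's starting form. Forms already
    // visited are not counted twice, and forms in 'ignored' (other menu
    // starting points, "hub" forms that open almost everything) are skipped.
    public static HashSet<string> ReachableForms(
        string startForm,
        IDictionary<string, HashSet<string>> calls,
        ISet<string> ignored)
    {
        var visited = new HashSet<string>(StringComparer.OrdinalIgnoreCase) { startForm };
        var pending = new Stack<string>();
        pending.Push(startForm);
        while (pending.Count > 0)
        {
            var current = pending.Pop();
            if (!calls.TryGetValue(current, out var callees)) continue;
            foreach (var callee in callees)
            {
                if (ignored.Contains(callee) || !visited.Add(callee)) continue;
                pending.Push(callee);
            }
        }
        return visited;
    }

    // Hours to replace one menu item = sum of the estimates of everything reachable.
    public static double MenuItemHours(
        string startForm,
        IDictionary<string, HashSet<string>> calls,
        ISet<string> ignored,
        IDictionary<string, double> hoursPerForm)
    {
        return ReachableForms(startForm, calls, ignored)
            .Sum(form => hoursPerForm.TryGetValue(form, out var h) ? h : 0.0);
    }
}
```

Passing the other menu starting forms and the ‘hub’ forms in `ignored` mirrors exclusions b) and c) described above; exclusion a) is handled by the visited set.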

Now, there are some serious issues with this strategy, like the high probability of missed complexity, missed functionality, and overall inaccuracy, but these issues were known and pointed out at the time the estimate was delivered.

Was the estimate a success? Was it accurate? Honestly, I’d say it was a success, but it didn’t turn out to be accurate.

…. Wait! What?

How could an estimate be a success if it wasn’t accurate?

Well, let me qualify that: some of the core underlying assumptions changed dramatically 5 months into the project, which increased the complexity far beyond the simple web design the estimate was based on.

The big lesson learned from this task wasn’t so much about estimation as it was about managing requirements and sign off. …. But I digress. 😉

Anyway, I think the estimation I performed was well grounded in something, even if that something was not as thoroughly researched as would be ideal. I believe the executed strategy had a good return on investment.


* For the record, I already had more consulting work than I could handle at the time, so while a rewrite was interesting, steering the client into an unwarranted rewrite was not beneficial for anybody.

** LOC sizes include comments, white space, and database object DML.

*** The other statistics (LOC, etc.) were actually one of my false starts at doing this analysis.


Technical Achievements in my Last Project

I’ve wanted to write this series for a long time, but hadn’t gotten around to it. Now, however, with my contract ending soon, I feel that if I don’t write some of this down, I will never find the time and it will be lost forever. That would be disappointing, since I feel I did some really interesting things on this project which could benefit others.

This isn’t about the kludges needed to make Microsoft’s dysfunctional Web Forms architecture work the way I needed it to. It’s not about how to fire a server-side event from a button created on the client side, or how to write JavaScript for an ASCX control used multiple times on the same page when you don’t know what the rendered ID will be, and it’s not about overcoming resistance created by bizarre vendor API paradigms or outright bugs. It’s about overcoming the big requirements challenges placed before me.

The project basically revolved around a very large and complex Microsoft Access application which had many issues. It was determined that the best course of action was to rebuild it as an ASP.NET web application. However, two important constraints were placed upon me: a) the new application could not be developed in parallel with everybody switched over all at once upon completion, and b) the new web application had to run from within the existing MS Access application and interact with it seamlessly until the MS Access app was completely replaced. The first constraint wasn’t a big deal until you consider that the existing database was a total mess in need of refactoring, and the Access app was a spaghetti code nightmare where changes could potentially drag on into eternity. It was wisely decided very early on that we would not do anything to upset the stability of the existing application.

The series will cover some of the more interesting things done on this project, over 7 parts:

- Introduction and Overview

Introduction to the series, brief run down of the general requirements and intention of the project.

- Large Application Estimation in 2 Weeks

How I assessed the condition of a very large and complex MS Access application with 540 KLOC and almost 1,800 database objects, and how I was able to provide a very rough estimate for its reconstruction with a 2-week deadline. I expect to be able to piece together how I did this from memory and the rough notes I have, but if I’m unable to come up with something meaningful, this post may get nixed.

- An Abstract Data Model

Because I did not want to unsettle the existing software and needed to keep the data in sync, while simultaneously refactoring the database and providing a good data model to serve as the foundation of the new application, the new data model was simulated. This was an interesting approach which I’ve never seen anybody else take.

- A User Configurable List Mini-Framework

Unnecessary and/or missing list columns came up repeatedly in conversations with users, so I designed a configurable, flexible, and extensible mini-framework for quickly building data lists, with user-selected and user-positioned columns, advanced filtering, sorting, and exporting.

- Embedded Web App to MS Access App Communications

Communicating from client-side JavaScript to the containing Access application that the web page runs within was one of the more innovative solutions on this project. But what’s even more interesting is that I was able to inject the functionality unobtrusively with a simple 70 LOC JavaScript file, which can be swapped out to remove the functionality.

- The Plug In Architecture

Intrusive integration is a major problem, tying companies to specific vendors and creating unnecessary dependencies. I designed a simple plug-in architecture that allows a developer to implement an interface, make one configuration change, and run without any changes to the underlying application.

- HTML Table Column Sizing similar to Excel

Users didn’t like the standard HTML tables and wanted Excel-like column sizing. Finding information on how to do this proved impossible, so I sat on it for a while and eventually created a small JavaScript function which adds column sizing to any HTML table without breaking the existing cell editing script.
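The plug-in architecture summarized above (implement an interface, make one configuration change) can be sketched with plain reflection. This is an illustrative sketch, not the project’s actual code: IExportPlugin, UpperCaseExportPlugin, and PluginLoader are hypothetical names, and in a real application the type name would be read from configuration rather than passed in as a literal.

```csharp
using System;

// Hypothetical contract a third-party developer would implement.
public interface IExportPlugin
{
    string Name { get; }
    string Export(string data);
}

// Example plug-in; in practice it would ship in its own assembly.
public class UpperCaseExportPlugin : IExportPlugin
{
    public string Name => "Upper-case exporter";
    public string Export(string data) => data.ToUpperInvariant();
}

public static class PluginLoader
{
    // The "one configuration change" is the type name passed in here.
    // Type.GetType resolves it (throwing if it cannot be found), and
    // Activator.CreateInstance requires a public parameterless constructor.
    public static IExportPlugin Load(string configuredTypeName)
    {
        Type type = Type.GetType(configuredTypeName, throwOnError: true);
        if (!typeof(IExportPlugin).IsAssignableFrom(type))
            throw new InvalidOperationException(
                configuredTypeName + " does not implement IExportPlugin.");
        return (IExportPlugin)Activator.CreateInstance(type);
    }
}
```

Swapping in a different plug-in then means changing only the configured type name; the host code that calls `Load` never changes. Note that `Type.GetType` only finds a type without an assembly name if it lives in the calling assembly or the core library, so a separately shipped plug-in needs an assembly-qualified name in configuration.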

I hope you find this series beneficial. I expect to complete the next 6 posts over the next 2 weeks, but make no promises. I am currently looking for a new contract after all.


The CRUDLAFS Technique for Software Estimation

Like so many other programmers, I have always had a problem with software estimation and costing out software projects. Most of my career was plagued by estimates so bad that I made significantly less money on my independent projects than I did at my low-paying day jobs. This was not the reason I was working through my vacations!

The turning point came when I realized the CRUD acronym encompassed most of the functionality of the line-of-business applications I was writing, and could be used as a checklist to ensure I wasn’t missing functionality. Later, this checklist was refined into CRUDLAFS.

Wait! What? CRUDLAFS? … did I just coin a new acronym? …. Hmmm, looks like I did!</egosmirk>

While I’m sure you are already familiar with the CRUD acronym, here is CRUDLAFS:

| Category | Scope |
|---|---|
| Create | All functionality around validating and creating an entity |
| Read | All functionality around reading and displaying an entity |
| Update | All functionality around validating and updating an entity |
| Delete | All functionality around deleting an entity |
| List | All functionality around querying and displaying a list of entities |
| Additional | All additional functionality related to an entity |
| Filter | All functionality around filtering a list of entities |
| Sort | All functionality around sorting a list of entities |

I set up an Excel spreadsheet with CRUDLAFS as column headings, all my known or probable entities down the left, and probable hours to accomplish each in the cells, like so:

So in my example, the estimated time to create a list of orders is 5 hours.
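The spreadsheet layout can also be sketched as a small data structure: one row per entity, one column per CRUDLAFS category, hours in the cells. Apart from the 5 hours for listing orders, every entity and hour figure below is a hypothetical placeholder, and the totals are simple row, column, and grand sums.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public enum Crudlafs { Create, Read, Update, Delete, List, Additional, Filter, Sort }

public static class CrudlafsSheet
{
    // Rows: entity name -> hours per CRUDLAFS column (missing cells count as 0).
    public static readonly Dictionary<string, Dictionary<Crudlafs, double>> Estimates =
        new Dictionary<string, Dictionary<Crudlafs, double>>
        {
            ["Order"] = new Dictionary<Crudlafs, double>
            {
                [Crudlafs.Create] = 6, [Crudlafs.Read] = 2, [Crudlafs.Update] = 4,
                [Crudlafs.Delete] = 1, [Crudlafs.List] = 5, // 5 hours to list orders
                [Crudlafs.Additional] = 8, [Crudlafs.Filter] = 3, [Crudlafs.Sort] = 1,
            },
            ["Customer"] = new Dictionary<Crudlafs, double>
            {
                [Crudlafs.Create] = 3, [Crudlafs.Read] = 1, [Crudlafs.Update] = 2,
                [Crudlafs.Delete] = 1, [Crudlafs.List] = 3,
                [Crudlafs.Filter] = 2, [Crudlafs.Sort] = 1, // no "Additional" work
            },
        };

    // Row total: all hours for one entity.
    public static double EntityTotal(string entity) => Estimates[entity].Values.Sum();

    // Column total: one category summed across all entities.
    public static double ColumnTotal(Crudlafs column) =>
        Estimates.Values.Sum(row => row.TryGetValue(column, out var h) ? h : 0);

    // Grand total for the whole sheet.
    public static double GrandTotal() => Estimates.Keys.Sum(entity => EntityTotal(entity));
}
```

With these placeholder numbers, Order totals 30 hours, Customer 13, and the whole sheet 43.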

And by known or probable entities, I mean this: on smaller projects, I usually have a pretty good idea what all the entities will be, but on big projects, I charge hourly until the requirements, data model, GUI design / functional specifications, and initial risk assessment are complete. Most larger projects just have too much back-and-forth communication, and the customer usually has no more than a vague idea of what they want. This distinction has been very important; it has kept me out of bankruptcy.

I get the feeling this may not work well with agile approaches, but for Big Design Up Front projects, it has been a major leap for me.


7 Features I Wish C# Had

A while ago I saw the StackOverflow question ‘What enhancements do you want for your programming language?’. To my surprise, I was actually able to come up with something, but to be honest, I don’t really think much about what should be changed in my current programming language. Don’t get me wrong, I bitched and moaned about Visual Basic for about a decade, but it wasn’t that I wanted VB ‘changed’; I just didn’t want to work in it at all. But I digress; actually, you may want to avoid me if I ever get on that topic.

I’ve thought about this question since, but the only real features I can come up with revolve around building a domain-specific language for a specific industry and methodology. For example, I think it would be cool to build a language around a technical analysis technique called Elliott Wave.

More recently, I’ve heard Jeff Atwood assert, on the StackOverflow podcast, that any good programmer can whip off 10 things they hate about their favorite programming language in a flash.*

I couldn’t come up with 10 things I hate, so I’m going to settle for 7 features I’d like to see. There may be good reasons why we don’t have some of them, but here’s my list anyway:

1. Parameter constraints

One of the first things I learned to do when I started programming was to validate parameters. This has saved me untold hours of debugging, and I currently start every method with something like:

```csharp
void DoSomething(float percentage, string userName)
{
    if (0 > percentage || 100 < percentage)
        throw new ArgumentOutOfRangeException(nameof(percentage));

    if (string.IsNullOrEmpty(userName))
        throw new ArgumentNullException(nameof(userName));

    // User names are limited to 20 characters
    if (20 < userName.Length)
        throw new ArgumentOutOfRangeException(nameof(userName));

    if (userName.Equals("Guest"))
        throw new ArgumentException("User must log in.", nameof(userName));

    // .... do stuff
}
```

This is in almost every method. So why can’t the language do this for me automatically?

Yes, I know about declarative programming, but isn’t that just pushing the validation into attributes, where a third-party tool has to process it?

What I’m suggesting is something similar to the generics keyword ‘where’. Why not have something like this:

```csharp
void DoSomething(float percentage where 0 <= percentage && 100 >= percentage,
                 string userName where !string.IsNullOrEmpty(userName) && 20 >= userName.Length && !"Guest".Equals(userName))
{
    // .... do stuff
}
```

Really complex validation rules could be moved into their own functions, or you could always fall back to validating in the body of the method the way we do now.
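Until the language offers something like that, one plain C# workaround is to move the repetitive checks into small reusable guard methods, so each method starts with one line per rule. Guard and GuardExample below are hypothetical helpers, not a framework API, and the checks still run in the method body at run time rather than being enforced by the compiler.

```csharp
using System;

// Hypothetical guard helpers: each throws the same exception type the
// hand-written checks would, naming the offending parameter.
public static class Guard
{
    public static void InRange(double value, double min, double max, string paramName)
    {
        if (value < min || value > max)
            throw new ArgumentOutOfRangeException(paramName, value,
                $"Must be between {min} and {max}.");
    }

    public static void NotNullOrEmpty(string value, string paramName)
    {
        if (string.IsNullOrEmpty(value))
            throw new ArgumentNullException(paramName);
    }

    public static void MaxLength(string value, int maxLength, string paramName)
    {
        if (value != null && value.Length > maxLength)
            throw new ArgumentOutOfRangeException(paramName,
                $"Must be at most {maxLength} characters.");
    }

    public static void Requires(bool condition, string message, string paramName)
    {
        if (!condition)
            throw new ArgumentException(message, paramName);
    }
}

// The DoSomething example rewritten with the guards: one line per rule.
public static class GuardExample
{
    public static void DoSomething(float percentage, string userName)
    {
        Guard.InRange(percentage, 0, 100, nameof(percentage));
        Guard.NotNullOrEmpty(userName, nameof(userName));
        Guard.MaxLength(userName, 20, nameof(userName));
        Guard.Requires(!"Guest".Equals(userName), "User must log in.", nameof(userName));

        // .... do stuff
    }
}
```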

2. An ‘IN’ operator

I find the following code a bit redundant:

```csharp
if (x == 1 || x == 2 || x == 3 || x == 5 || x == 8 || x == 13)
{
    // .... do stuff
}
```

I’d prefer to borrow the SQL keyword and just write

```csharp
if (x in (1, 2, 3, 5, 8, 13))
{
    // .... do stuff
}
```

EDIT: Apparently SecretGeek already created a class for SQL Style Extensions for C# which does exactly what I wanted (for strings anyway). Thanks Darrel Miller for the link.
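A generic extension method gets close to the SQL syntax in C# today. This is a hypothetical sketch of the idea, not SecretGeek’s class; it compares with `EqualityComparer<T>.Default`, so it works for numbers and other types, not just strings.

```csharp
using System.Collections.Generic;

public static class InExtensions
{
    // value.In(a, b, c) is true when value equals any of the listed candidates.
    public static bool In<T>(this T value, params T[] candidates)
    {
        var comparer = EqualityComparer<T>.Default;
        foreach (var candidate in candidates)
        {
            if (comparer.Equals(value, candidate))
                return true;
        }
        return false;
    }
}
```

With it, the example above becomes `if (x.In(1, 2, 3, 5, 8, 13)) { ... }`. The params array is allocated on every call, which rarely matters outside tight loops.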

3. A 3-way between operator

I’ve always wished we could replace

```csharp
if (fiscalYearStartDate <= currentDate && currentDate <= fiscalYearEndDate)
{
    // ... do stuff
}
```

with

```csharp
if (fiscalYearStartDate <= currentDate <= fiscalYearEndDate)
{
    // ... do stuff
}
```
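An extension method can get part of the way there. `IsBetween` is a hypothetical name; the check is inclusive at both ends to match the two `<=` comparisons above, and it works for `DateTime` or any other type implementing `IComparable<T>`.

```csharp
using System;

public static class BetweenExtensions
{
    // Inclusive range check: low <= value && value <= high.
    public static bool IsBetween<T>(this T value, T low, T high) where T : IComparable<T>
    {
        return low.CompareTo(value) <= 0 && value.CompareTo(high) <= 0;
    }
}
```

The fiscal year check then reads `if (currentDate.IsBetween(fiscalYearStartDate, fiscalYearEndDate))`.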

4. Only allow var on true anonymous types

var adds awesome functionality to C#, like the ability to create anonymous types from an expression. However, when I start seeing code where every variable is typed ‘var’, I get a little worried.

Now, I know about type inference, and that var is the same at run time as explicitly stating the type, but when you want to look up what the heck variable v is, it’d be nice if the variable definition told me

```csharp
ObjectX v = new ObjectX();
```

instead of

```csharp
var v = new ObjectX();
```

I also realize I can figure out the type by looking at the right side of the assignment operator, and in the example above, it’s pretty darn clear exactly what type v is. But what if the expression is a method call?

```csharp
var v = DoSomething();
```

Now I’ve got to look up the definition of DoSomething().

Not a big deal, but you’re already 2 definitions away from your core task. This is needless resistance as far as I’m concerned.

Well, you say, you could just use IntelliSense and hover over the method to get its return type. That is true because, thank god, a method’s return type (along with its parameters) must be specified, so I can get the type from IntelliSense with only one jump to a source code definition. However, this logic is flawed: if the type had been used instead of var, IntelliSense would have told me the type without any jumps at all, rather than giving me the useless “(local variable) object v” tip I receive with var.

You know, I am pretty anal about this type of thing, and NO, I haven’t had any real-world problems yet, but it just feels wrong.

5. Constant methods and properties

One of the features I always used when I coded in C++ was const member functions. The great thing about them is that they prevent side effects on the object’s state: if someone (yourself included) alters a function that is not expected to change anything so that it now has a side effect, it won’t compile and will have to be dealt with.

This isn’t the most popular feature in C++, and while the concept helped me write bulletproof code on my own, when I started working on a team, my teammates weren’t very thrilled with the keyword and quickly removed it. But hey, that’s kind of a tell, isn’t it?

To be honest, I don’t usually have problems like this with projects I initiate. This may be irrelevant with good design, but I would like the peace of mind in having it anyway.

6. JIT properties without a backing field to cache the value

Remember how you’d need to create a private backing field for every property you created? Like:

```csharp
private int _id = 0;

public int Id
{
    get { return _id; }
    set { _id = value; }
}
```

Which became

```csharp
public int Id { get; set; }
```

But what about properties with JIT functionality, or ones that have a side effect? Something like this seems awfully unfair:

```csharp
private int _nextId = 0;

public int NextId
{
    get { return _nextId++; }
    set { _nextId = value; }
}
```

Why not have something like a thisProperty keyword? Something like:

```csharp
public int NextId
{
    get { return thisProperty++; }
    set;
}
```

7. Warn command

I’m not talking about the preprocessor #warning. I want something like throw, but without interrupting program flow.

Why? Let’s say your data access layer pulls a null or otherwise unexpected value out of a database for a field which should have a very specific set of values.**

This is recoverable and no big deal; you use a default value, but you might like to somehow warn the user that this was done. So what are the options? You can’t throw an exception, since that would interrupt your program flow. I can’t think of any framework component you could use***. You could add some external dependency, I suppose, or build your own external dependency which all future apps will require. You cannot use one of the GUI-level features like Session, since it wouldn’t be available at the DAL DLL level. And you definitely don’t want to add parameters to every method so you can pass some warning collection up & down the stack!

But something like a warn command looks awfully elegant!

```csharp
warn new UnexpectedDataWarning("Unexpected status code. Set to 'Open'.");
```

Then in the GUI level, you can traverse the warnings collection and display them to the user.
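Without a language keyword, one way to approximate this is an ambient, per-thread warnings list that the DAL appends to and the GUI drains. This is only a sketch under stated assumptions: Warnings and OrderStatusReader are hypothetical types, UnexpectedDataWarning is modeled on the example above, and because the list is `[ThreadStatic]`, warnings raised on another thread (for example, after an async continuation resumes elsewhere) are not collected.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical warning type, modeled on the warn example above.
public class UnexpectedDataWarning
{
    public UnexpectedDataWarning(string message) { Message = message; }
    public string Message { get; }
}

// Hypothetical ambient warnings collection: the DAL calls Warn(...) and keeps
// running; the GUI later calls Drain() and shows whatever was collected.
public static class Warnings
{
    [ThreadStatic]
    private static List<UnexpectedDataWarning> _pending;

    public static void Warn(UnexpectedDataWarning warning)
    {
        if (_pending == null) _pending = new List<UnexpectedDataWarning>();
        _pending.Add(warning);
    }

    // Returns the warnings collected on this thread and clears the list.
    public static IReadOnlyList<UnexpectedDataWarning> Drain()
    {
        var result = _pending ?? new List<UnexpectedDataWarning>();
        _pending = null;
        return result;
    }
}

// Example DAL-style code: recover with a default instead of throwing.
public static class OrderStatusReader
{
    public static string ReadStatus(string rawStatus)
    {
        if (rawStatus == "Open" || rawStatus == "Closed")
            return rawStatus;

        Warnings.Warn(new UnexpectedDataWarning("Unexpected status code. Set to 'Open'."));
        return "Open";
    }
}
```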

* I can’t figure out which episode it was, so no link. Please comment if you know. Thanks.

** Yes, the database should have constraints, but sometimes things are not under your control or perhaps you have a logical reason to not create constraints … but that’s another post … probably early next week.

*** At least, I don’t know of anything. Please let me know if there is something built into the core framework which would allow this.


WARNING – All source code is written to demonstrate the current concept. It may be unsafe and not exactly optimal.

When to start looking for another job

Last week I listened to Seth Godin’s audiobook Linchpin. It really resonated with me and I had a few significant insights.


One of the interesting ideas he promoted was the idea of ‘gifts’. A gift, as he explains it, is any additional work above and beyond what is required as part of the ‘transaction’. The transaction is you fulfilling your end of the bargain in exchange for them fulfilling theirs. Seth Godin explains a transaction as

If I sell you something, we exchange items of value. You give me money, I give you stuff, or a service. The deal is done. We’re even. Even steven, in fact.

And he explains a gift as

If I give you something, or way more than you paid for, an imbalance is created.

Let’s say a client is having an issue, and after some digging, you have an insight: a slight change that not only resolves the current problem, but prevents similar problems from occurring throughout the entire application. The client did not offer to pay for it, and you can’t charge them for it. As a matter of fact, if you are a consultant, it will reduce the future billable work of fixing those problems. The insight and the change are a gift.*

Seth continues with regards to the gift:

That imbalance must be resolved.

So how is this imbalance resolved? … Appreciation

Yep … that’s it.

If you have a particularly astute client/employer, you may receive additional work and referrals as a consultant, or a bonus, raise, and/or promotion as an employee, but these are peripheral. Appreciation is the critical element for the recipient to experience**. If your gifts are not appreciated, the client/employer does not value what you have to offer and both of you should seek out more compatible relationships.

So why give a gift? Personally, I give gifts because I want to create the best software I am capable of creating. Creating beautiful software, not just meeting requirements, is the very nature of craftsmanship. Beautiful software is a gift. I can’t write software without gifts. I am actually repulsed by the thought of merely delivering what was asked for, since the requirements are always missing something. Yes, I’m repulsed. It reminds me of those Mad magazine comics, ‘If kids designed their own xmas toys’. With few exceptions, the results would be horrendous!

Unfortunately, I’ve noticed a downward cycle in the reception of my software gifts. When I start a new position, my gifts are recognized and appreciated. You are ‘the man’ (or woman) and everybody is ecstatic with every gift. However, the appreciation always seems to dissipate. Maybe it’s a ‘familiarity breeds contempt’ type of thing, or perhaps it’s just me (but I don’t think it is).

I believe there are 5 phases of perception regarding the receipt of your gifts:

- Worshiped – You are new with the client/employer and your gifts are truly unexpected, recognized as such, and are appreciated. You get thanked a lot by every recipient.

- Valued – You’ve been here for a while, and although these above and beyond tasks are appreciated, they’re not exactly unexpected anymore. You don’t get thanked much, but they realize you are a valued service provider.

- Unappreciated – Your gifts are expected and/or unrecognized, but unappreciated any way you slice it.

- Tolerated – Your gifts are viewed as a time consuming waste of effort, but tolerated. You continue to provide them out of a desire to do a good job.

- Rejected – Your gifts are rejected and no longer tolerated. Every suggestion of doing something with additional benefit is rejected.

In my opinion, the transition from Worshiped to Valued is normal, expected, and even desired. People can’t run around thanking you for the rest of your life, nor would you want them to. It might get a little creepy. 😉

The transition from Valued to Unappreciated is your cue to leave. This is a downward slide, and it’s unlikely that things are ever going to move back up to Valued***. You are in a great position to find other work: you have plenty of time to find the ideal next job or project, and you are leaving on a high note with a favorable memory still in their minds. However, you do need to be objective in your observation; your gifts may still be recognized and appreciated, while the feedback you are receiving is colored by some other pressing issue at the company and is misleading.

If you’ve moved to Tolerated or Rejected, you have completely missed your cue to leave, and there is an obviously serious disconnect between what you are offering as a gift and what management perceives as valuable. Regardless of the reason, both you and the client/employer might need to seek more compatible relationships***.

So what if the problem is not in the ‘perception’, but you have actually become complacent and are no longer delivering the gifts? If this is the case, you had better get back on track, because these gifts are your value added proposition and the only thing separating you from the lowest cost outsourcing alternative.

EDIT (03/08/2010): Ben Alabaster added a great comment about how an IT department being viewed as a Cost Center or Profit Center will impact on how your gifts are perceived.

*This is not a gift if the change took a significantly larger amount of time which the client did not agree to.

** The appreciation does not have to be communicated, but it must be felt by the recipient.

*** It has occurred to me that gifts could be ‘adjusted’ to more closely align with what management values. However, I think this idea is flawed, since your ‘gift’ is your best ideas for improving the software, whereas management is mostly interested in features, which is something completely different.


My name is John MacIntyre.
