An Abstract Data Model

This is post 3 from a 7 part series entitled Technical Achievements in my Last Project.

Overview

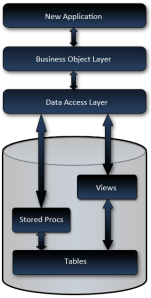

Normally, when I build a new system, I design the new data model based on the requirements, and build my business objects and data access based primarily on that data model*. The remainder of the application is built on the components beneath it, so when you change something at the bottom, like the data model, changes ripple throughout the application. The data model serves as the foundation of my application.

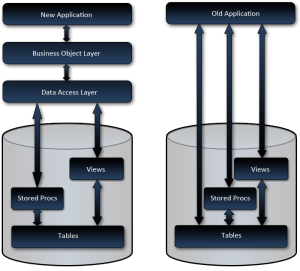

Now as far as this project goes, one of the important requirements was to deliver the new system incrementally, while leaving the older system to run in parallel until completely replaced.

Parallel Data Models

This presented a bit of a dilemma for me since the current database was … well … lacking, and I was planning to refactor it enough to make it a very unstable foundation for the old system. I wanted to refactor it for a number of reasons, including: missing primary keys, no foreign keys, no constraints, data fields which were required but not there, data fields which were there but not used, data fields containing 2 or more pieces of information, and tables which should have been multiple tables. Not to mention the desire for a consistent naming convention, without the insane column names using characters like ‘/’ and ‘?’ … seriously.

However, the parallel systems requirement complicated this. I mean, how do you manage parallel systems when one needs a stable foundation and the other is so temperamental that you don’t want to touch it?

My options as I saw them were something like:

- Scrap the data model refactoring.

This really didn’t get much thought. Well it did, but the thought was, is this the best route for the client? And if so, should I offer to help them find my replacement or just leave? I definitely wasn’t up for replacing one unmaintainable piece of junk with another.

- New data model and refactor the existing app.

The existing application was a total nightmare built in classic Access spaghetti code fashion. Just touching that looked like going down a rabbit hole of certain doom.

- New application on the old data model and refactor the data model later.

This would have caused a real disconnect between the data model and the application. I’m not sure the data model and application would ever have lined up properly. Not to mention the client’s probable later decision not to complete that part of the project since everything worked. This seemed like a very bad idea.

- Build a parallel data model for the new system, while leaving the old system as is.

From a development point of view, this seemed like the best alternative, but keeping an active database in sync presented a serious, possibly unconquerable, challenge.

The final option of refactoring the data model immediately and basing all construction on a solid foundation was definitely the most appealing. But how do we keep it in sync? I’m sure there are tools out there for that, but with a possibly dramatically different data model? With active live data? Even if there are tools, I doubt the price would have been within the project’s budget**. And if such a tool did exist, how would we bring concurrency issues back to the users who caused the conflict?

Abstract Data Model

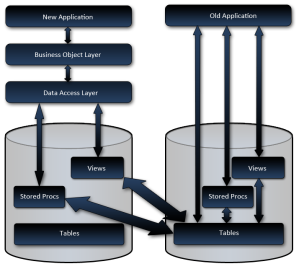

That’s when I had the idea: why not just build an abstraction layer on the database? Why not manage the data all in one database while abstracting out the other data model? Why not build a simulated data model? Why not just redirect all my views and procs to the other database?

This was so bloody simple. Why hadn’t I ever heard of anybody else doing this before?

So the plan was to refactor the data model and build a concrete database, but instead of having the stored procedures and views point to that database’s own tables as they normally would, they would point to the tables in the other database. All changes would proceed as usual. For example, if the client had a change request which required a new field in a table, it would be added to the physical table, the views and stored procedures would be updated, and the applications would change to accommodate it. And when the old system was completely replaced, all that would need to be done is to rewrite the DML to point at the new database’s own tables. Even the data transition would be easier since we’d already have views aggregating data in the expected format!
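To make the idea concrete, here is a minimal sketch in Python with SQLite. It is not the code from the project: the table `tblProd`, its awkward column names, and the `product` view are all hypothetical, and a single in-memory database stands in for both the old and the new database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical legacy table with the kind of column names described above.
    CREATE TABLE "tblProd" ("Prod/ID" INTEGER, "Name?" TEXT, "Price" REAL);
    INSERT INTO "tblProd" VALUES (1, 'Widget', 9.99), (2, 'Gadget', 24.50);

    -- The new system only ever queries this view, which presents the
    -- refactored shape while the data still lives in the legacy table.
    CREATE VIEW product AS
    SELECT "Prod/ID" AS product_id,
           "Name?"   AS name,
           "Price"   AS unit_price
    FROM "tblProd";
""")

# New-system code is written against the clean names only.
rows = conn.execute(
    "SELECT product_id, name, unit_price FROM product ORDER BY product_id"
).fetchall()
print(rows)
```

When the old system is retired, only the view definition has to change to read from a real `product` table; the query in the application stays the same.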

I was pretty excited about this when I designed it and told a few developer friends, who thought it was either a stupid idea, problem-ridden, or pointless at best. Now I do have a lot of stupid and pointless ideas, but I didn’t feel like this was one of them.

Implementation Challenges

So how did I implement it?

Well once the new data model was finished, I wrote the views and stored procedures, as you might expect, but at this point you run into the following challenges:

- Required data missing from the existing database

For example: a create date for products, so the business knows when a product was added to the system.

- Existing data in the old system requires new values

For example: an order has a boolean status field for ‘pending’ & ‘completed’, but the business requires the statuses to be changed to ‘pending’, ‘ordered’, ‘shipped’.

- Non-existing data tables need to be simulated

For example: let’s say the business wants the user to be able to request product literature on the order with regular products. You’ll need to simulate orders for product literature ordered via the old system.

The non-existing data tables were easily simulated with a view. However, these views often came with a performance penalty, and this is one of the few cases where the new application needed minor modifications to work around a problem. Basically, different views were created for different situations, and the data access component would select the most appropriate view based on the circumstance.
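A rough sketch of that arrangement, again in Python with SQLite. Everything named here is an assumption made for illustration: the `legacy_order` and `legacy_lit_request` tables, the two views, and the `load_orders` helper standing in for the data access component.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical legacy tables: regular orders and literature requests.
    CREATE TABLE legacy_order (order_id INTEGER PRIMARY KEY, customer TEXT);
    CREATE TABLE legacy_lit_request (request_id INTEGER PRIMARY KEY, customer TEXT);
    INSERT INTO legacy_order VALUES (1, 'Acme');
    INSERT INTO legacy_lit_request VALUES (7, 'Globex');

    -- Cheap view: regular orders only.
    CREATE VIEW order_regular AS
    SELECT order_id, customer, 'product' AS order_kind FROM legacy_order;

    -- Fuller view: literature requests are presented as if they were orders.
    -- (order_kind, order_id) identifies a row, since ids may collide.
    CREATE VIEW order_all AS
    SELECT order_id, customer, order_kind FROM order_regular
    UNION ALL
    SELECT request_id, customer, 'literature' FROM legacy_lit_request;
""")


def pick_order_view(include_literature: bool) -> str:
    """Choose the lightest view that still answers the question."""
    return "order_all" if include_literature else "order_regular"


def load_orders(include_literature: bool):
    # The view name comes from the fixed choice above, never from user input.
    view = pick_order_view(include_literature)
    sql = f"SELECT order_kind, order_id, customer FROM {view} ORDER BY order_kind, order_id"
    return conn.execute(sql).fetchall()


print(load_orders(False))
print(load_orders(True))
```

Callers that do not need the simulated rows never pay for the `UNION ALL`.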

Extension Tables

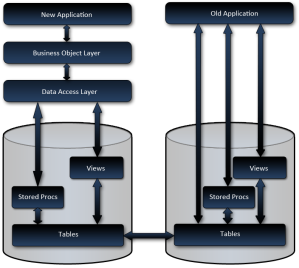

The missing required data and data changes (like status codes) were handled with extension tables.

So if I had a table named ‘order’ for example, I would create a new table called ‘order_x’, with a matching primary key column, plus columns for data that was required but missing, and data which required changing. Then insert, update, and delete triggers would be added to the ‘order’ table so changes from the old system would keep the extension table up to date. And procs and views on the new system would join the 2 tables to represent it as a cohesive unit.
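The pattern can be sketched in Python with SQLite as follows. This is an illustration under assumptions rather than the project's schema: the `created_at` column, the trigger names, and the `order_full` view are made up, and only the insert and delete triggers are shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Legacy table, still written to by the old system.
    -- "order" is quoted because ORDER is a reserved word.
    CREATE TABLE "order" (order_id INTEGER PRIMARY KEY, customer TEXT);

    -- Extension table: matching key plus data the old schema lacks.
    CREATE TABLE order_x (
        order_id   INTEGER PRIMARY KEY,
        created_at TEXT NOT NULL
    );

    -- Keep the extension table in step with writes from the old system.
    CREATE TRIGGER order_after_insert AFTER INSERT ON "order"
    BEGIN
        INSERT INTO order_x (order_id, created_at)
        VALUES (NEW.order_id, datetime('now'));
    END;

    CREATE TRIGGER order_after_delete AFTER DELETE ON "order"
    BEGIN
        DELETE FROM order_x WHERE order_id = OLD.order_id;
    END;

    -- The new system reads the two tables as one cohesive unit.
    CREATE VIEW order_full AS
    SELECT o.order_id, o.customer, x.created_at
    FROM "order" AS o
    JOIN order_x AS x ON x.order_id = o.order_id;
""")

# Simulate the old system writing straight to its own table.
conn.execute('INSERT INTO "order" (order_id, customer) VALUES (1, ?)', ("Acme",))
conn.execute('INSERT INTO "order" (order_id, customer) VALUES (2, ?)', ("Globex",))
conn.execute('DELETE FROM "order" WHERE order_id = 2')

rows = conn.execute("SELECT order_id, customer, created_at FROM order_full").fetchall()
extension_count = conn.execute("SELECT COUNT(*) FROM order_x").fetchone()[0]
print(rows, extension_count)
```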

If the current fields required value changes and/or new values, the new values would be stored in a field in the extension table, and the update trigger on the main table would update the status when it changed from the old system. In situations where the data did not sync up 1 to 1, certain column mapping rules would be used. To extend the order status example: ‘pending’ in the old system is the same as ‘pending’ in the new system, but what about ‘completed’? Is that ‘ordered’ or ‘shipped’? It might be mapped so that if the old system updates the order to ‘completed’, the extension table is changed to ‘ordered’, and if the new system updates the status to either ‘ordered’ or ‘shipped’, the ‘order’ table status is updated to ‘completed’.
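Those mapping rules can be written down as two small lookup tables. The Python sketch below is only an illustration: the function names are hypothetical, and the rule that an old-system write must not downgrade ‘shipped’ to ‘ordered’ is an added assumption, not something the project is known to have done.

```python
# Old-system value -> value stored in the extension table.
OLD_TO_NEW = {"pending": "pending", "completed": "ordered"}

# New-system value -> value written back to the legacy 'order' table.
NEW_TO_OLD = {"pending": "pending", "ordered": "completed", "shipped": "completed"}


def new_status_after_old_update(old_status: str, current_new_status: str) -> str:
    """Status the extension table should hold after the old system writes old_status."""
    # Assumption: if the current new status already maps to the value the old
    # system wrote, keep it, so 'shipped' is not downgraded to 'ordered'.
    if NEW_TO_OLD.get(current_new_status) == old_status:
        return current_new_status
    return OLD_TO_NEW[old_status]


def old_status_after_new_update(new_status: str) -> str:
    """Status the legacy table should hold after the new system writes new_status."""
    return NEW_TO_OLD[new_status]


print(new_status_after_old_update("completed", "pending"))
print(new_status_after_old_update("completed", "shipped"))
print(old_status_after_new_update("shipped"))
```

Because the mapping is many-to-one in one direction, information is lost on the way back to the old system, which is why the extension table has to remain the source of truth for the finer-grained status.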

The Dirty Data Problem

But the biggest problem was dirty data. This was a killer! This is the one challenge which plagued us throughout the entire project and knocked us off our schedule continuously. Because the old system was still being used, and it placed absolutely no restrictions on the users, we were getting situations which never could have been predicted. This was causing the application to act in unexpected ways, and even after making changes to accommodate the dirty data, we received endless support inquiries on unexpected behavior caused by null data and unexpected values.

There were changes to the application based on this as well. We actually had to change our business objects to set default enum values and make most properties nullable types, even though in the new data model, they were not nullable. This doesn’t affect input, but anywhere that data was being read from the database, we had to accommodate it. These nullable types will not require changing when the old system is completely replaced, but they do represent a smell which I hope somebody will eventually eliminate.
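As a sketch of what such a defensive business object can look like, here is a Python version. The `Order` class, the `OrderStatus` enum with its `UNKNOWN` default, and the `order_from_row` helper are all hypothetical names chosen for the example, not the project's actual types.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class OrderStatus(Enum):
    UNKNOWN = "unknown"  # default for values the new model does not recognise
    PENDING = "pending"
    ORDERED = "ordered"
    SHIPPED = "shipped"


@dataclass
class Order:
    order_id: int
    # Not nullable in the new data model, but rows written by the old system
    # may still arrive without a value, so the property has to allow None.
    customer: Optional[str]
    status: OrderStatus = OrderStatus.UNKNOWN


def parse_status(raw: Optional[str]) -> OrderStatus:
    """Map whatever the database holds to an enum value, falling back to UNKNOWN."""
    if raw is None:
        return OrderStatus.UNKNOWN
    try:
        return OrderStatus(raw.strip().lower())
    except ValueError:
        return OrderStatus.UNKNOWN


def order_from_row(row: tuple) -> Order:
    order_id, customer, raw_status = row
    return Order(order_id, customer, parse_status(raw_status))


dirty = order_from_row((1, None, "??"))
clean = order_from_row((2, "Acme", " Shipped "))
print(dirty, clean)
```

The tolerance lives only on the read path; code that writes through the new system can still insist on complete, valid values.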

Conclusion

Overall though, I’d say this strategy was an overwhelming success. Other than the dirty data issue, which still rears its head every now and again, there have been no problems since it was first deployed.

If you can get away from a parallel deployment, I would recommend doing so, but if you can’t, I really think this strategy is a good one.

EDIT: After I posted this, it occurred to me that this strategy really cost almost nothing, since the biggest cost was in setting up the views to extract the data out of the system in the expected format, which would have needed to be done when the data was moved to the new system anyway. The only real extra work was the extension tables and abstract procs, neither of which were very difficult once the mapping was established in the views. My colleague Ben Alabaster also pointed out that even if we had bought an overpriced sync tool, configuring the tool would have taken longer than setting up my solution.

Credit: Thank you, Ben Alabaster, for the illustrations.

* I need a pretty good reason to build a data model and object model that are different. I’ve done it, but it’s rare to have a compelling enough reason.

** At the time I wasn’t aware of any tools to do this. Karen Lopez was kind enough to let me know that TIBCO & Informatica may have done the job, but are expensive. From what I can tell, these tools would have been more expensive than the strategy I implemented. Thanks Karen.

Copyright © John MacIntyre 2010, All rights reserved

10 things to review after finishing your data model

When I finish modeling my database, I like to just let it sit a couple days, then spend some time just reviewing it to check for inconsistencies. The kind of inconsistencies you never notice when you’re up to your eyeballs in details, but drive you up the wall after 5 years of maintenance.

When I finished my database design a few days ago, I jotted a few things down to remember to do. But when I transcribed them into my bug tracker, where I manage all my tasks, 3 things turned into 5, then 7 and I realized if I could just add a few more, I’d have an infamous ‘Top 10’ list.

Anyway here it is. It’s not exactly comprehensive, but it’s a start.

10 things to review after finishing your data model:

- Naming consistencies

- Column default consistencies

- Identity / auto-number technique exists

- Constraint consistencies

- Foreign Key relationships exist

- Indexes on Foreign Key columns

- Abbreviations are consistent

- Abbreviations are documented

- Data type and size consistencies. (For example: TableA.UserName is nvarchar(N), so TableB.UserName should be nvarchar(N) as well, not nvarchar(N±X))

- Review all requirements again to reconfirm everything was covered
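Some of these checks can be automated. As one example, the data type and size check could be scripted roughly like this in Python against a SQLite database. The helper name `inconsistent_column_types` and the sample tables are hypothetical, and other database engines would need their own catalog queries in place of `sqlite_master` and `PRAGMA table_info`.

```python
import sqlite3
from collections import defaultdict


def inconsistent_column_types(conn: sqlite3.Connection) -> dict:
    """Return {column_name: {declared types}} for names declared with more than one type."""
    types_by_column = defaultdict(set)
    # Read the table list first so no cursor is left open while running PRAGMAs.
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table'"
    )]
    for table in tables:
        # table_info rows are (cid, name, type, notnull, dflt_value, pk).
        for _cid, name, declared_type, *_rest in conn.execute(
            f'PRAGMA table_info("{table}")'
        ):
            types_by_column[name.lower()].add(declared_type.upper())
    return {col: types for col, types in types_by_column.items() if len(types) > 1}


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE TableA (UserName nvarchar(50), Email nvarchar(50));
    CREATE TABLE TableB (UserName nvarchar(100), Email nvarchar(50));
""")

result = inconsistent_column_types(conn)
print(result)
```

Matching on column name alone will flag some false positives, such as a generic `Name` column that legitimately differs between tables, so the output is a list of things to look at rather than a list of errors.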

My name is John MacIntyre.
