An Abstract Data Model

This is post 3 from a 7 part series entitled Technical Achievements in my Last Project.

Overview

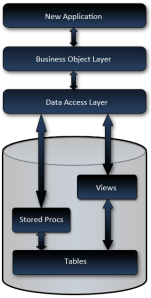

Normally, when I build a new system, I design the new data model based on the requirements, and build my business objects and data access based primarily on that data model*. The remainder of the application is built on the components beneath it, so when you change something at the bottom, like the data model, changes ripple throughout the application. The data model serves as the foundation of my application.

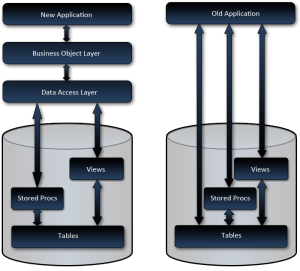

Now as far as this project goes, one of the important requirements was to deliver the new system incrementally, while leaving the older system to run in parallel until completely replaced.

Parallel Data Models

This presented a bit of a dilemma for me since the current database was … well … lacking, and I was planning to refactor it enough to make it a very unstable foundation for the old system. I wanted to refactor it for a number of reasons, including: missing primary keys, no foreign keys, no constraints, data fields which were required but not there, data fields which were there but not used, data fields containing 2 or more pieces of information, and tables which should have been multiple tables. Not to mention the desire to achieve a consistent naming convention without the insane column names using characters like ‘/’ and ‘?’ … seriously.

However, the parallel systems requirement caused a bit of a dilemma. I mean, how do you manage parallel systems when one of them needs a stable foundation and the other is so temperamental that you don’t want to touch it?

My options as I saw them were something like:

- Scrap the data model refactoring.

This really didn’t get much thought. Well it did, but the thought was, is this the best route for the client? And if so, should I offer to help them find my replacement or just leave? I definitely wasn’t up for replacing one unmaintainable piece of junk with another.

- New data model and re-factor the existing app.

The existing application was a total nightmare built in classic Access spaghetti code fashion. Just touching that looked like going down a rabbit hole of certain doom.

- New application on the old data model and refactor the data model later.

This would have caused a real disconnect between the data model and the application. I’m not sure if the data model and application ever would have lined up properly. Not to mention the client’s probable later decision not to complete that part of the project since everything worked. This seemed like a very bad idea.

- Build a parallel data model for the new system, while leaving the old system as is.

From a development point of view, this seemed like the best alternative, but keeping an active database in sync presented a serious, possibly unconquerable, challenge.

The final option of refactoring the data model immediately and basing all construction on a solid foundation was definitely the most appealing. But how do we keep it in synch? I’m sure there are tools out there for that, but with a possibly dramatically different data model? With active live data? Even if there are tools, I doubt the price would have been within the project’s budget**. And if they did exist, how would we bring concurrency issues back to the users who caused the conflict?

Abstract Data Model

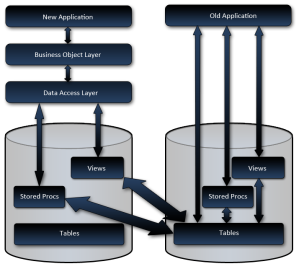

That’s when I had the idea: why not just build an abstraction layer on the database? Why not manage the data all in one database while abstracting out the other data model? Why not build a simulated data model? Why not just redirect all my views and procs to the other database?

This was so bloody simple. Why hadn’t I ever heard of anybody else doing this before?

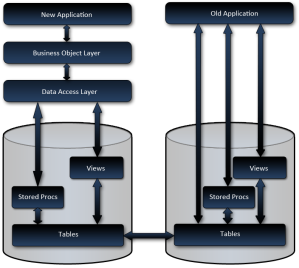

So the plan was to refactor the data model, build a concrete database, and instead of having its stored procedures and views point to its own tables like they were meant to, have them point to the tables in the other database. All changes would proceed as usual. For example, if the client had a change request which required a new field in a table, it would be added to the physical table, views and stored procedures would be updated, and the applications would change to accommodate. And when the old system was completely replaced, all that would need to be done is to rewrite the DML to point at the new database’s own tables. Even the data transition would be easier since we’d already have views aggregating data in the expected format!
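The real layer was built from SQL views and stored procedures, which I won’t reproduce here. Purely as an in-memory analogy of the mapping such a view performs, here is a minimal C# sketch. The legacy column names (`Cust/Name`, `City/Prov?`), the `Customer` shape, and the `CustomerView` class are all invented for illustration and are not from the project.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// The clean shape the new data model exposes (hypothetical).
public class Customer
{
    public string Name { get; set; }
    public string City { get; set; }
    public string Province { get; set; }
}

public static class CustomerView
{
    // Projects legacy rows (column name -> value) into the new model's shape,
    // the way a view over the old tables would.
    public static List<Customer> Select(IEnumerable<Dictionary<string, string>> legacyRows)
    {
        return legacyRows.Select(row =>
        {
            // One legacy column holding two pieces of information: "City, Province".
            string[] parts = (row["City/Prov?"] ?? "").Split(',');
            return new Customer
            {
                Name = row["Cust/Name"],
                City = parts[0].Trim(),
                Province = parts.Length > 1 ? parts[1].Trim() : null
            };
        }).ToList();
    }
}
```

The caller only ever sees `Customer`, so where the data physically lives can change later without the caller noticing, which is the point of the simulated model.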

I was pretty excited about this when I designed it and told a few developer friends, who thought it was either a stupid idea, problem ridden, or pointless at best. Now I do have a lot of stupid and pointless ideas, but didn’t feel like this was one of them.

Implementation Challenges

So how did I implement it?

Well once the new data model was finished, I wrote the views and stored procedures, as you might expect, but at this point you run into the following challenges:

- Required data missing from the existing database

For example, a create date for products so business knows when a product was added to the system.

- Existing data in old system requires new values

For example, an order has a boolean status field for ‘pending’ & ‘completed’, but business requires the statuses to be changed to ‘pending’, ‘ordered’, ‘shipped’.

- Non-existing data tables need to be simulated

For example, let’s say business wants the user to be able to request product literature on the order with regular products; you’ll need to simulate orders for product literature ordered via the old system.

The non-existing data tables were easily simulated with a view. However, these often came with a performance penalty. This is one of the few cases where the new application needed minor modifications to get around. Basically, different views were created for different situations, and the data access component would select the most appropriate view based on the circumstance.
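To make the view selection idea concrete, here is a minimal sketch of how a data access component might pick a view by circumstance. The scenarios and view names are hypothetical; the project’s actual views are not listed in this post.

```csharp
using System;

// Hypothetical query scenarios, invented for illustration.
public enum OrderQueryScenario
{
    SingleOrder,   // load one order by key
    RecentOrders,  // list screen limited to recent activity
    FullHistory    // reporting over everything, including simulated rows
}

public static class OrderViewSelector
{
    // Returns the name of the view the data access code should query.
    // The view names are assumptions, not real objects from the project.
    public static string SelectView(OrderQueryScenario scenario)
    {
        switch (scenario)
        {
            case OrderQueryScenario.SingleOrder:
                return "vw_order_by_key";
            case OrderQueryScenario.RecentOrders:
                return "vw_order_recent";
            case OrderQueryScenario.FullHistory:
                return "vw_order_all";
            default:
                throw new ArgumentOutOfRangeException("scenario");
        }
    }
}
```

Keeping the choice in one place means the rest of the application never needs to know that several views stand in for one logical table.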

Extension Tables

The missing required data and data changes (like status codes) were handled with extension tables.

So if I had a table named ‘order’ for example, I would create a new table called ‘order_x’, with a matching primary key column, plus columns for data that was required but missing, and data which required changing. Then insert, update, and delete triggers would be added to the ‘order’ table so changes from the old system would keep the extension table up to date. And procs and views on the new system would join the 2 tables to represent it as a cohesive unit.
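In the project the join lived in SQL views and procs. As an in-memory illustration only, the sketch below joins an ‘order’ row to its ‘order_x’ row with LINQ. The column names and the fallback for a missing extension row are assumptions for the example.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Row from the legacy 'order' table (columns are illustrative).
public class LegacyOrderRow
{
    public int OrderId { get; set; }
    public bool Completed { get; set; }
}

// Row from the 'order_x' extension table, sharing the primary key.
public class OrderExtensionRow
{
    public int OrderId { get; set; }
    public DateTime CreateDate { get; set; }
    public string Status { get; set; }
}

// The cohesive unit the new system sees.
public class OrderRecord
{
    public int OrderId { get; set; }
    public DateTime? CreateDate { get; set; }
    public string Status { get; set; }
}

public static class OrderView
{
    // Left join: every legacy order appears even if its extension row is missing.
    public static List<OrderRecord> Join(
        IEnumerable<LegacyOrderRow> orders,
        IEnumerable<OrderExtensionRow> extensions)
    {
        var query =
            from o in orders
            join x in extensions on o.OrderId equals x.OrderId into matches
            from x in matches.DefaultIfEmpty()
            select new OrderRecord
            {
                OrderId = o.OrderId,
                CreateDate = x == null ? (DateTime?)null : x.CreateDate,
                // Assumed fallback when no extension row exists yet.
                Status = x != null ? x.Status : (o.Completed ? "ordered" : "pending")
            };
        return query.ToList();
    }
}
```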

If the current fields required value changes and/or new values, the new values would be stored in a field in the extension tables, and the update trigger on the main table would update the status when it changed from the old system. In situations where the data did not synch up 1 to 1, certain column mapping rules would be used. To extend the order status example: ‘pending’ in the old system is the same as ‘pending’ in the new system, but what about ‘completed’? Is that ‘ordered’ or ‘shipped’? It might be mapped so that if the old system updates the order to ‘completed’, it would change the extension table to ‘ordered’, and if the new system updated the status to either ‘ordered’ or ‘shipped’, the ‘order’ table status would be updated to ‘completed’.
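The mapping rule itself is small enough to write down. Here it is sketched in C# for readability; in the project this logic sat in triggers and procs. The polarity of the legacy flag (true meaning ‘completed’) and the `ApplyLegacyUpdate` helper are assumptions for the example.

```csharp
using System;

public enum NewOrderStatus { Pending, Ordered, Shipped }

public static class OrderStatusMapping
{
    // Old system -> new system. Assumes the legacy flag is true for 'completed'.
    // 'completed' is ambiguous, so it maps to 'ordered' as in the example above.
    public static NewOrderStatus FromLegacy(bool legacyCompleted)
    {
        return legacyCompleted ? NewOrderStatus.Ordered : NewOrderStatus.Pending;
    }

    // New system -> old system: both 'ordered' and 'shipped' collapse to 'completed'.
    public static bool ToLegacy(NewOrderStatus status)
    {
        return status != NewOrderStatus.Pending;
    }

    // What an update trigger might do when the old system touches the row:
    // change the extended status only if the legacy value actually changed,
    // so an order already 'shipped' is not knocked back to 'ordered'.
    public static NewOrderStatus ApplyLegacyUpdate(NewOrderStatus current, bool legacyCompleted)
    {
        if (ToLegacy(current) == legacyCompleted)
            return current;
        return FromLegacy(legacyCompleted);
    }
}
```

The mapping is lossy in one direction, which is why the extension table has to hold the richer value rather than deriving it from the old column every time.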

The Dirty Data Problem

But the biggest problem was dirty data. This was a killer! This is the one challenge which plagued us throughout the entire project and continuously knocked us off our schedule. Because the old system, which offered the users absolutely no restrictions, was still being used, we were getting situations which never could have been predicted. This caused the application to act in unexpected ways, and even after making changes to accommodate the dirty data, we received endless support inquiries on unexpected behavior caused by null data and unexpected values.

There were changes to the application based on this as well. We actually had to change our business objects to set default enum values and make most properties nullable types, even though in the new data model, they were not nullable. This doesn’t affect input, but anywhere that data was being read from the database, we had to accommodate it. These nullable types will not require changing when the old system is completely replaced, but they do represent a smell which I hope somebody will eventually eliminate.
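A minimal sketch of what that looks like in a business object, assuming a hypothetical `Order` with a date and a shipping code. The class, enum, and `OrderReader` helper are invented for illustration; the raw values are passed as `object` so the example runs without a database.

```csharp
using System;

// Unknown = 0 is the default, so unexpected legacy values have somewhere safe to land.
public enum ShippingMethod { Unknown = 0, Ground = 1, Air = 2 }

public class Order
{
    public int OrderId { get; set; }
    // Not nullable in the new data model, but legacy rows may still hold nulls.
    public DateTime? OrderDate { get; set; }
    public ShippingMethod ShippingMethod { get; set; }
}

public static class OrderReader
{
    // Builds an Order from raw column values as they might come off a data reader.
    public static Order Read(object orderId, object orderDate, object shippingCode)
    {
        return new Order
        {
            OrderId = Convert.ToInt32(orderId),
            OrderDate = IsMissing(orderDate) ? (DateTime?)null : Convert.ToDateTime(orderDate),
            ShippingMethod = ParseShipping(shippingCode)
        };
    }

    private static bool IsMissing(object value)
    {
        return value == null || value is DBNull;
    }

    // Falls back to Unknown for nulls, non-numeric text, and undefined codes.
    private static ShippingMethod ParseShipping(object value)
    {
        if (IsMissing(value)) return ShippingMethod.Unknown;
        int code;
        if (!int.TryParse(value.ToString(), out code)) return ShippingMethod.Unknown;
        return Enum.IsDefined(typeof(ShippingMethod), code)
            ? (ShippingMethod)code
            : ShippingMethod.Unknown;
    }
}
```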

Conclusion

Overall though, I’d say this strategy was an overwhelming success. Other than the dirty data issue, which still rears its head every now and again, there have been no problems since it was first deployed.

If you can get away from a parallel deployment, I would recommend doing so, but if you can’t, I really think this strategy is a good one.

EDIT: After I posted this, it occurred to me that this strategy really cost almost nothing, since the biggest costs were in setting up the views to extract the data out of the system in the expected format, which would have needed to be done when the data was moved to the new system anyway. The only real extra work was the extension tables and abstract procs, neither of which was very difficult once the mapping was established in the views. My colleague Ben Alabaster also pointed out that even if we had bought an overpriced synch tool, configuring the tool would have taken longer than building my solution.

Credit: Thank you, Ben Alabaster, for the illustrations.

* I need a pretty good reason to build a data model and object model that are different. I’ve done it, but it’s rare to have a compelling enough reason.

** At the time I wasn’t aware of any tools to do this. Karen Lopez was kind enough to let me know that TIBCO & Informatica may have done the job, but are expensive. From what I can tell, these tools would have been more expensive than the strategy I implemented. Thanks Karen.

Copyright © John MacIntyre 2010, All rights reserved

Large Application Estimation in 2 Weeks

This is post 2 from a 7 part series entitled Technical Achievements in my Last Project.

My role in this project started with a request to assess the existing project and provide insight into options to move it forward, one of those options being a rewrite*. An estimate was needed for the rewrite option, and I was given 2 weeks to do it.

This post explains how I was able to pull off this massive estimation undertaking in a mere 2 weeks.

Ideally, the project documentation from the existing system could be used to give an excellent estimate, but this is a blog post, not a fairy tale. Or a thorough specification could have been derived from an in-depth analysis of the existing application, which business could have adjusted as needed and used to arrive at a reasonable estimate. But this is the real world, and this is a real business; and I was given a real (short) deadline.

Now I should also mention this wasn’t a 20 KLOC project; it was a fairly complex piece of software with over 500 KLOC** and almost 1800 database objects along with satellite applications. Everybody understood how this short timeframe severely limited the accuracy of anything I would be able to provide, but I was determined to do the best job possible.

So my next goal was to figure out how to do a somewhat accurate estimate, given the constraints, where I wouldn’t be setting myself up for a lynching at the end of it. I explored many different ways to get a rough idea about the entire project’s scope.

This is what I finally settled on:

- Dumped all Microsoft Access Objects

First I modified an Access VBA script I found for exporting objects to text files and exported everything.

- Dumped all database DDL

I wrote a little command line utility to loop through a SQL Server database, pull the DDL for each object using the sp_helptext stored procedure, and write it out to text files.

- Created an analysis database

Created an analysis database primarily comprised of three tables: one for all the entities the application is comprised of, a second for linking which entity called which, and the third for linking menu items to all dependent forms.

- Collected the names of all objects into the database

I wrote another little command line utility to read each code file dumped out in the first two steps, and add the object’s name and a few other statistics.***

- Determined all entity relationships

I wrote another command line utility which traversed each code file, reading in the code, and determining which of the known entities it was dependent upon. This information was stored in the second linking table in the analysis database.

- Determined dependencies of each menu item

Wrote yet another command line application to traverse the dependencies to determine which menu item could eventually load which forms. Certain forms were ignored in the calculation, including a) previously calculated forms (obviously), b) menu item starting point forms, and c) specific forms which could load almost every other form in the application.

- Ball park estimated each GUI component

Loaded each of the nearly 400 forms and 200 reports, and did ball park estimations on each one, deriving what business logic I could glean from the UI. I used the CRUDLAFS estimation technique to ensure I didn’t miss any basic functionality.

Other than trying to figure out how I would do the estimation, this was the most time consuming task. Just think, even at a mere 3 minutes per form, we are still talking 30 hours of tedious effort.

- Totaled the estimates

- Menu estimate breakdown

In order to determine the time to replace one complete menu item with all functionality from that starting point, I needed to sum the estimates from all dependencies from that form onward. So I queried the times for each menu item starting point, summing all dependency estimates and added it to my report.
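The dependency traversal and the per-menu totals can be sketched in a few lines. This is a minimal reconstruction under my own assumptions, not the original utilities: the in-memory dictionaries stand in for the analysis database tables, and the `MenuEstimator` name is invented.

```csharp
using System;
using System.Collections.Generic;

public static class MenuEstimator
{
    // dependencies: form name -> forms it can open.
    // estimates:    form name -> ball park hours for that form.
    // ignored:      forms excluded from traversal (other menu starting points,
    //               hub forms which can load almost everything).
    public static double TotalHours(
        string startForm,
        IDictionary<string, List<string>> dependencies,
        IDictionary<string, double> estimates,
        ISet<string> ignored)
    {
        var visited = new HashSet<string>();
        var pending = new Stack<string>();
        pending.Push(startForm);
        double total = 0;

        while (pending.Count > 0)
        {
            string form = pending.Pop();
            if (!visited.Add(form)) continue; // previously calculated form

            double hours;
            if (estimates.TryGetValue(form, out hours)) total += hours;

            List<string> children;
            if (!dependencies.TryGetValue(form, out children)) continue;

            foreach (string child in children)
            {
                if (!ignored.Contains(child) && !visited.Contains(child))
                    pending.Push(child);
            }
        }
        return total;
    }
}
```

The visited set is what keeps circular form references from looping forever, and the ignored set is what stops one hub form from dragging the whole application into every menu item’s total.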

Now there are some serious issues with this strategy, like the high probability of missed complexity, missed functionality, and just overall inaccuracies, but these issues were known and pointed out at the time with the estimate.

Was the estimate a success? Was it accurate? Honestly, I’d say it was a success, but it didn’t turn out to be accurate.

…. Wait! What?

How could an estimate be a success if it wasn’t accurate?

Well, let me revise that by saying some of the core underlying assumptions were changed dramatically 5 months into the project which increased complexity far beyond the simplistic web design the estimate was based on.

The big lesson learned from this task wasn’t so much about estimation as it was about managing requirements and sign off. …. But I digress. 😉

Anyway, I think the estimation I performed was well grounded in something, even if that something was not as thoroughly researched as would be ideal. I believe the executed strategy had a good return on investment.

* For the record, I already had more consulting work than I could handle at the time, so while a rewrite was interesting, steering the client into an unwarranted rewrite was not beneficial for anybody.

** LOC sizes include comments, white space, and database object DML.

*** The other statistics (LOC, etc.) were actually one of my false starts in how to do this analysis.

Technical Achievements in my Last Project

I’ve wanted to write this series for a long time, but hadn’t gotten around to it. Now, however, with my contract ending soon, I feel if I don’t write some of this down, I will never find the time, and it will be lost forever, which would be disappointing since I feel there are some really interesting things I did on this project which could benefit others.

This isn’t about the kludges needed to fix Microsoft’s dysfunctional webforms architecture to work the way I need it to. It’s not about how to fire a server side event from a client side created button or how to write JavaScript for an ASCX used multiple times on the same page, when you don’t know what the rendered ID will be, and it’s not about overcoming resistance created by bizarre vendor API paradigms or outright bugs. It’s about overcoming the big requirements challenges placed before me.

The project basically revolved around a significantly large and complex Microsoft Access application which had many issues. It was determined the best course of action was to rebuild it as an ASP.NET web application. However, two important constraints were placed upon me: a) the new system could not be developed in parallel and then have everybody switched over all at once upon completion (it had to be delivered incrementally), and b) the new web application must be run from within the existing MS Access application and interact seamlessly until the MS Access app is completely replaced. The first constraint wasn’t a big deal until you consider the fact that the existing database was a total mess, needing refactoring, and the Access app was a spaghetti code nightmare where changes could potentially drag into eternity. It was wisely decided very early on that we would not do anything to upset the stability of the existing application.

The series will cover some of the more interesting things done on this project and will be over 7 parts:

- Introduction and Overview

Introduction to the series, brief run down of the general requirements and intention of the project.

- Large Application Estimation in 2 Weeks

How I assessed the condition of a very large and complex MS Access application with 540 KLOC and almost 1,800 database objects, and how I was able to provide a very rough estimate to its reconstruction with a 2 week deadline. I expect to be able to piece together how I did this from memory and rough notes I have, but if I’m unable to come up with something meaningful, this may get nixed.

- An Abstract Data Model

Because I did not want to unsettle the existing software and needed to keep the data in synch, while simultaneously refactoring the database and providing a good data model to serve as the foundation of the new application, the new data model was simulated. This was an interesting approach which I’ve never seen anybody else do.

- A User Configurable List Mini-Framework

Unnecessary and/or missing list columns came up repeatedly in conversations with users, so I designed a configurable, flexible, and extendable mini framework for quickly building data lists which allowed for user selected and positioned columns, advanced filtering, sorting, and exporting.

- Embedded Web App to MS Access App Communications

Communicating from client side JavaScript to the container Access application the webpage is being run within was one of the more innovative solutions on this project. But what’s even more interesting was that I was able to unobtrusively inject the functionality with a simple 70 LOC JavaScript file which can be switched out to remove the functionality.

- The Plug In Architecture

Intrusive integration is a major problem, tying companies to specific vendors and creating unnecessary dependencies. I designed a simple plug in architecture to allow a developer to implement an interface, make one configuration change, and run without any changes to the underlying application.

- HTML Table Column Sizing similar to Excel

Users didn’t like the standard HTML tables and wanted Excel like column sizing functionality. Finding information on how to do this proved impossible, so I sat on it for a while and eventually created a small JavaScript function which adds column sizing to any HTML table without messing up existing cell editing script.

I hope you find this series beneficial. I expect to complete the next 6 posts over the next 2 weeks, but make no promises. I am currently looking for a new contract after all.

AboutMe.xml

I have a pet peeve, and like most pet peeves it’s an irrelevant, petty little annoyance, not a huge, humanity-oppressing problem.

My pet peeve is filling out the same information (name, address, city, etc.) on paper forms. All that standard information at every doctor’s office, school, activity registration form for my kids, etc. I mean, why do I need to keep writing this stuff? And why does somebody else have to take the time to retype it into their system?

Really! In all seriousness … what a waste of time! 5 minutes I’ll never get back, every time I start a new relationship with any organization.

But wait … I have a vision! Not a big glorious, save humanity vision, it’s more of a save each person 5 minutes of writers cramp, kind of vision. Yes! That kind of glorious vision!

I was originally inspired with this in the mid-1990s. It started out as a question: why can’t the doctor’s receptionist retrieve this information from the province when they scan my health card? But since the likelihood of getting the government to add an API for this is slim, it was reduced to something simpler, like: why can’t I hand the receptionist at my new doctor a diskette with an ‘aboutme.txt’ file on it, which she can load into her PC before giving me my diskette back? This would free me up to spend an extra 3-5 minutes browsing the 4 year old magazines during my 76 minute wait to see the doctor.

Over the years, this vision has transformed from an aboutme.txt file on a 3.5” diskette to an aboutme.ini file on a diskette to an aboutme.ini file on a website to an aboutme.html file on website to an aboutme.xml file on a website to an aboutme.xml file on a USB memory stick. I’m not even going to go into ideas I had for RFID, bar coding, or carrying around printed labels in my wallet.

I’ll agree; this isn’t a big problem, but it’s an irritating little annoyance which can be easily overcome with a very simple programming solution. Surely, this would become a reality. Surely, this simple idea would be recognized by others, and implemented.

But alas, the obvious was never realized, because it would be impractical for any organization to expect you to have this aboutme.* file in your back pocket when nobody else had one or was asking for it. It’s the typical chicken / egg scenario; you need one to start the other.

But now I’m inspired again … by Open ID, or possibly another similar centralized authentication mechanism.

When I log into a new site via MyOpenID, I can choose the persona I want revealed to the site I’m logging into for the first time. One of these personas could easily contain standard address information like that required in the types of situations listed previously.

As Open ID reaches critical mass, with more people understanding and adopting it, providing and/or recommending software functionality to accept basic information via an Open ID login will become more realistic.

It’s easy enough to imagine a plausible working process, so I won’t bore you with that. However, there would be serious security concerns regarding logging into a critical authentication mechanism like Open ID from a shared kiosk, so the user would want to log in via their personal cell phone (or laptop or PC or …). And mainstream user adoption has a long way to go before something like this would even be offered, not because of technology, but due to slowly shifting paradigms.

There are obstacles to overcome before this could ever become a reality, but with centralized authentication schemes like Open ID, expecting most people to have an electronic copy of their basic information available will eventually be reasonable, and generic business software applications will start consuming that information.

And one day, hopefully before I die, I won’t have to fill out another one of those stupid forms.

Copyright © John MacIntyre 2009, All rights reserved

WARNING – All source code is written to demonstrate the current concept. It may be unsafe and not exactly optimal.

How To Write Dynamic SQL AND Prevent SQL Injection Attacks

One of my pet peeves is when general rules are taken as gospel, and declared as the only acceptable practice regardless of the circumstance.

One of the big ones is Dynamic SQL. There’s a heck of a good reason for this, and it’s called an SQL Injection Attack, and if you are not familiar with it, I would strongly urge you to leave this post right now, and read up on it.

Anyway, Dynamic SQL is not inherently evil, it’s the appending of user entered text that is evil. Appending user entered text is just lazy and can be easily avoided with parameterization.

The trick is to create dynamic SQL with parameters.

Interestingly, I’ve never seen anybody else do this. I am constantly hearing people recommend stored procedures … even when they are clearly not flexible enough to meet the required functionality. Don’t get me wrong, stored procedures have a lot of benefits, but flexibility isn’t one they’re known for.

And now for some code …

I created a console app which queries the SQL Server sample database AdventureWorks. The following static method was added to the Program class.

    // Requires: using System; using System.Data.SqlClient; using System.Text;
    public static int GetOrderCount(string productPrefix, SqlConnection cn)
    {
        // initialize SQL
        string starterSql = "SELECT count(*) FROM Production.Product";
        StringBuilder sbSql = new StringBuilder(starterSql);

        // add the filter only when a prefix was supplied
        if (!String.IsNullOrEmpty(productPrefix))
            sbSql.Append(" where [name] like @namePrefix");

        // initialize the command (disposed when we are done with it)
        using (SqlCommand cmd = new SqlCommand(sbSql.ToString(), cn))
        {
            // the user's text only ever travels as a parameter value
            if (cmd.CommandText.Contains("@namePrefix"))
                cmd.Parameters.AddWithValue("@namePrefix", productPrefix + "%");

            // get count
            return Convert.ToInt32(cmd.ExecuteScalar());
        }
    }

Basically, the function counts the products whose name starts with a certain prefix. (Despite its name, it queries Production.Product rather than orders.)

The strength of doing this via dynamic SQL is we only need to filter on the product name when a valid prefix parameter is passed in. So, if the optional parameter (productPrefix) exists and is valid, the filter condition is added to the SQL and the parameter is added to the SqlCommand object.

In this overly simplified example, we could manage the same thing by just setting the productPrefix variable to the ‘%’ wild card, but then we’d be doing a filter for nothing. Not to mention things might be a little more difficult if the operator were ‘equals’ instead of ‘like’, or if there were multiple optional parameters. Creating SQL dynamically means we don’t need to write some funky kludge and our SQL is always nice, simple, and doing minimal work.
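To show how this scales to multiple optional parameters, here is a minimal sketch that only builds the SQL text and the parameter values, so it runs without a database. The `color` and `minListPrice` filters and the `ParameterizedQuery` and `ProductQueryBuilder` types are hypothetical additions; `Color` and `ListPrice` are columns of Production.Product in AdventureWorks.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Simple holder for the generated SQL and its parameter values (hypothetical type).
public class ParameterizedQuery
{
    public string Sql { get; set; }
    public Dictionary<string, object> Parameters { get; set; }
}

public static class ProductQueryBuilder
{
    // Each filter is added only when its argument is supplied.
    // User text never becomes part of the SQL string, only a parameter value.
    public static ParameterizedQuery BuildCountQuery(
        string namePrefix, string color, decimal? minListPrice)
    {
        var conditions = new List<string>();
        var parameters = new Dictionary<string, object>();

        if (!String.IsNullOrEmpty(namePrefix))
        {
            conditions.Add("[Name] LIKE @namePrefix");
            parameters.Add("@namePrefix", namePrefix + "%");
        }
        if (!String.IsNullOrEmpty(color))
        {
            conditions.Add("[Color] = @color");
            parameters.Add("@color", color);
        }
        if (minListPrice.HasValue)
        {
            conditions.Add("[ListPrice] >= @minListPrice");
            parameters.Add("@minListPrice", minListPrice.Value);
        }

        var sql = new StringBuilder("SELECT COUNT(*) FROM Production.Product");
        if (conditions.Count > 0)
            sql.Append(" WHERE ").Append(String.Join(" AND ", conditions));

        return new ParameterizedQuery { Sql = sql.ToString(), Parameters = parameters };
    }
}
```

To execute it, each entry in `Parameters` would be handed to `cmd.Parameters.AddWithValue(...)` exactly as in the function above. Collecting the conditions in a list avoids the usual fiddling over whether the next clause needs a WHERE or an AND.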

To execute my function, I added the following code to the Main(…) method.

    // get total count
    Console.WriteLine("There are {0} products in total.",
        Program.GetOrderCount(null, cn));

    // get totals for various prefixes
    string[] prefixes = new string[6] { "a", "b", "c",
        "'; drop table Production.Product;--",
        "d", "e" };

    foreach (string prefix in prefixes)
    {
        Console.WriteLine("There are {0} products"
            + " prefixed with '{1}'.",
            Program.GetOrderCount(prefix, cn), prefix);
    }

First we call GetOrderCount(…) without a name prefix to test it without the parameter, then we traverse the array of possible prefixes (this would be the user entered data in a real app). Notice the fourth item? Pretty menacing eh? Don’t worry, it’s safe.

Here are the results

    There are 504 products in total.
    There are 3 products prefixed with 'a'.
    There are 4 products prefixed with 'b'.
    There are 12 products prefixed with 'c'.
    There are 0 products prefixed with ''; drop table Production.Product;--'.
    There are 3 products prefixed with 'd'.
    There are 9 products prefixed with 'e'.

Notice the ‘d’ and ‘e’ prefixes were searched, and items found, proving the ‘drop table’ statement was not injected into the command.

You’d be surprised how much I use this. Many of my objects have a static GetList(…) method, and this method usually has multiple overloads. Keeping with the DRY principle, I prefer to keep all my logic in one place, so this method will usually have one overload with every possible filter parameter, and all the other overloads will just call this one. Surprisingly, the overload with the code, is not overly complex, and is actually pretty simple.
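The overload pattern looks roughly like the sketch below. To keep it runnable without a database, an in-memory list stands in for the query, so the filtering here is LINQ rather than dynamic SQL. The `Product` class, its sample rows, and the `color` filter are invented for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class Product
{
    public string Name { get; set; }
    public string Color { get; set; }

    // Stand-in for the database table (sample rows are made up).
    private static readonly List<Product> Store = new List<Product>
    {
        new Product { Name = "Anvil", Color = "Black" },
        new Product { Name = "Axle", Color = "Silver" },
        new Product { Name = "Bolt", Color = "Silver" }
    };

    // Convenience overloads: no logic of their own, they just delegate.
    public static List<Product> GetList()
    {
        return GetList(null, null);
    }

    public static List<Product> GetList(string namePrefix)
    {
        return GetList(namePrefix, null);
    }

    // The one overload holding all the logic: every filter is optional
    // and is applied only when a value was supplied.
    public static List<Product> GetList(string namePrefix, string color)
    {
        IEnumerable<Product> query = Store;
        if (!String.IsNullOrEmpty(namePrefix))
            query = query.Where(p => p.Name.StartsWith(namePrefix, StringComparison.OrdinalIgnoreCase));
        if (!String.IsNullOrEmpty(color))
            query = query.Where(p => p.Color == color);
        return query.ToList();
    }
}
```

In the real thing, the body of the last overload is where the parameterized dynamic SQL gets assembled.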

What do you think? Will you use parameterized dynamic SQL in the future?

My name is John MacIntyre.
